Google has changed a lot in 20 years. What started as an index of “just” a few million pages is now reaching into the hundreds of billions; what was once a relatively simple (if very clever!) search engine is now an impossibly complex brew of machine learning, computer vision, and data science that finds its way into the daily lives of most of the planet.

Google held a small press event in San Francisco today to discuss what’s next, and what it saw as the “Future of Search”.

Here’s everything they talked about:

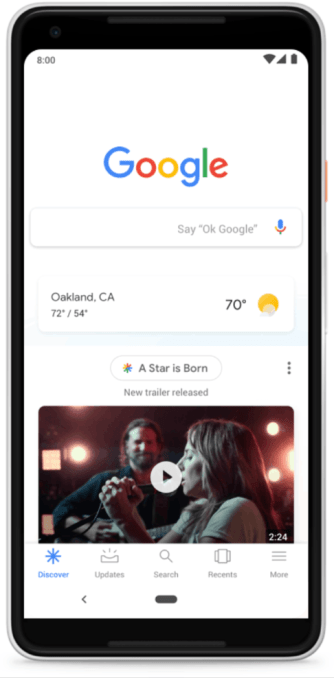

Google Discover

The famously minimalist Google.com homepage is about to get a bit more crowded (at least on mobile).

Google says that one in eight queries in a given month is a repeat: a user returning to search on a topic they care about. With that in mind, it's going to start highlighting these topics for you before you even begin your search.

Google Feed (the content discovery news feed it’s been building out in the dedicated Google App and on the Android home screen) is being rebranded as “Discover” and will now live underneath the Google.com search bar on all mobile browsers.

Discover will highlight news, video, and information about topics Google thinks you care about — like, say, hiking, or soccer, or the NBA. If you want a certain topic to show up more or less, there’s a slider to adjust accordingly.

It’ll start rolling out “in the next few weeks.”

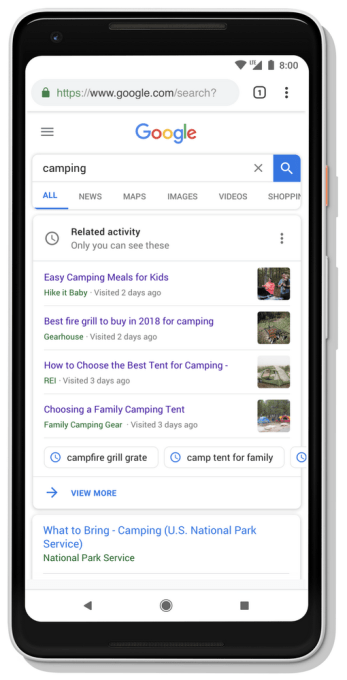

Recalling past queries

In the same vein: Google will now learn to recognize when you’re returning to a topic you’ve searched for before, and try to pick up where you left off. When you return to a search topic, you’ll now see a card at the top of the results that offers a list of the pages you clicked through to before, along with relevant follow-up queries people tend to search for next.

While Google keeping track of what you searched for is nothing new, finding that info generally meant digging through settings pages to find your history. With this, Google is attempting to turn something that otherwise seems a bit creepy into a front-page feature.

(And to answer what I imagine will be just about everyone’s first question: Google’s Nick Fox says you can remove the card, or “opt out of seeing it altogether.”)

Dynamic Organization

Trillions of searches later, Google knows what you’re probably looking for, and what you’ll be looking for next. And they can get pretty specific about it.

To use their example: if you’re searching for “Pug”, you’re probably looking for characteristics of the breed, or for images of well-known pugs. People searching for a longer-haired breed like a Yorkshire Terrier, meanwhile, are often interested in things like grooming details, even if that’s not the first thing they search for.

With this in mind, Google’s Knowledge Graph will now dynamically generate cards for a given topic and present them at the top of the results page: basically, an all-in-one info packet of everything it thinks you’re looking for, or might look for next.

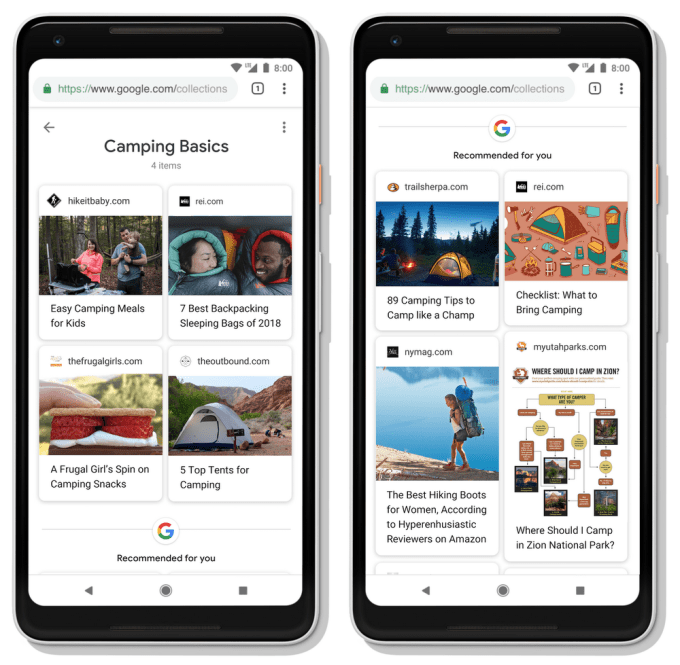

Collections

In a move that feels pretty Pinteresty, you’ll now be able to save search results into “collections” for later perusal. Google will look for patterns in your collections, and toss up suggested pages when it finds an overlap.

Stories

Snapchat has its stories. Facebook has stories. Instagram? Stories. Even Skype tried it for a while.

Now Google is “doubling down” (their words) on stories. AI will generate stories built up from articles, images, and videos on a search topic (starting with notable people “like celebrities and athletes”) and incorporate them into search results.

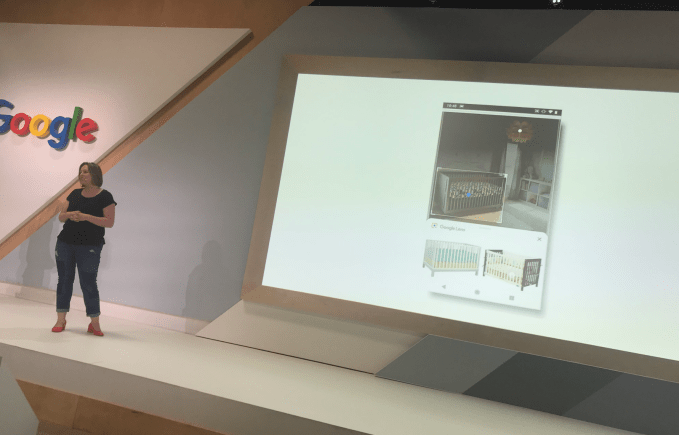

Google Images Upgrades

Google Images is picking up support for Google Lens — the company’s computer vision-heavy solution for figuring out exactly what is within an image. Their example: in a search result for “nursery”, Google Lens could help to identify a specific type of crib or bookcase that you’ve highlighted in an image.
