Google Photos already makes it easy for users to correct their photos with built-in editing tools and clever, A.I.-powered features for automatically creating collages, animations, movies, stylized photos, and more. Now the company is making it even easier to fix photos with a new version of the Google Photos app that will suggest quick fixes and other tweaks, such as rotations, brightness corrections, or pops of color, right below the photo you’re viewing.

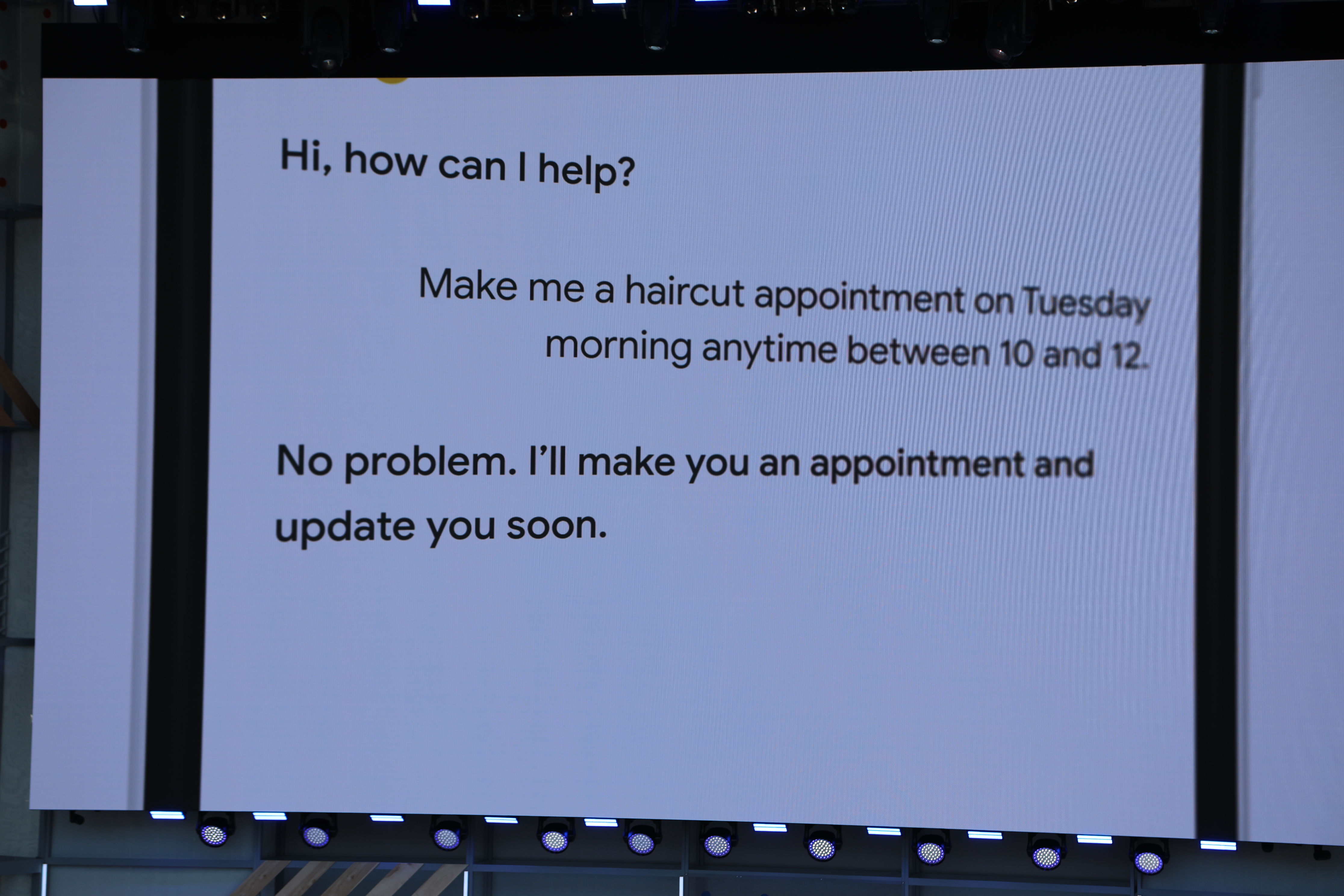

The changes, which are being introduced on stage at the Google I/O developer conference today, are yet another example of this year’s theme of bringing A.I. technology closer to the end user.

In Google Photos’ case, that means no longer just hiding the A.I. away within the “Assistant” tab, but putting it directly in the main interface.

The company says the idea of putting fix suggestions on the photos themselves came from realizing that viewing photos is where the app sees the most activity.

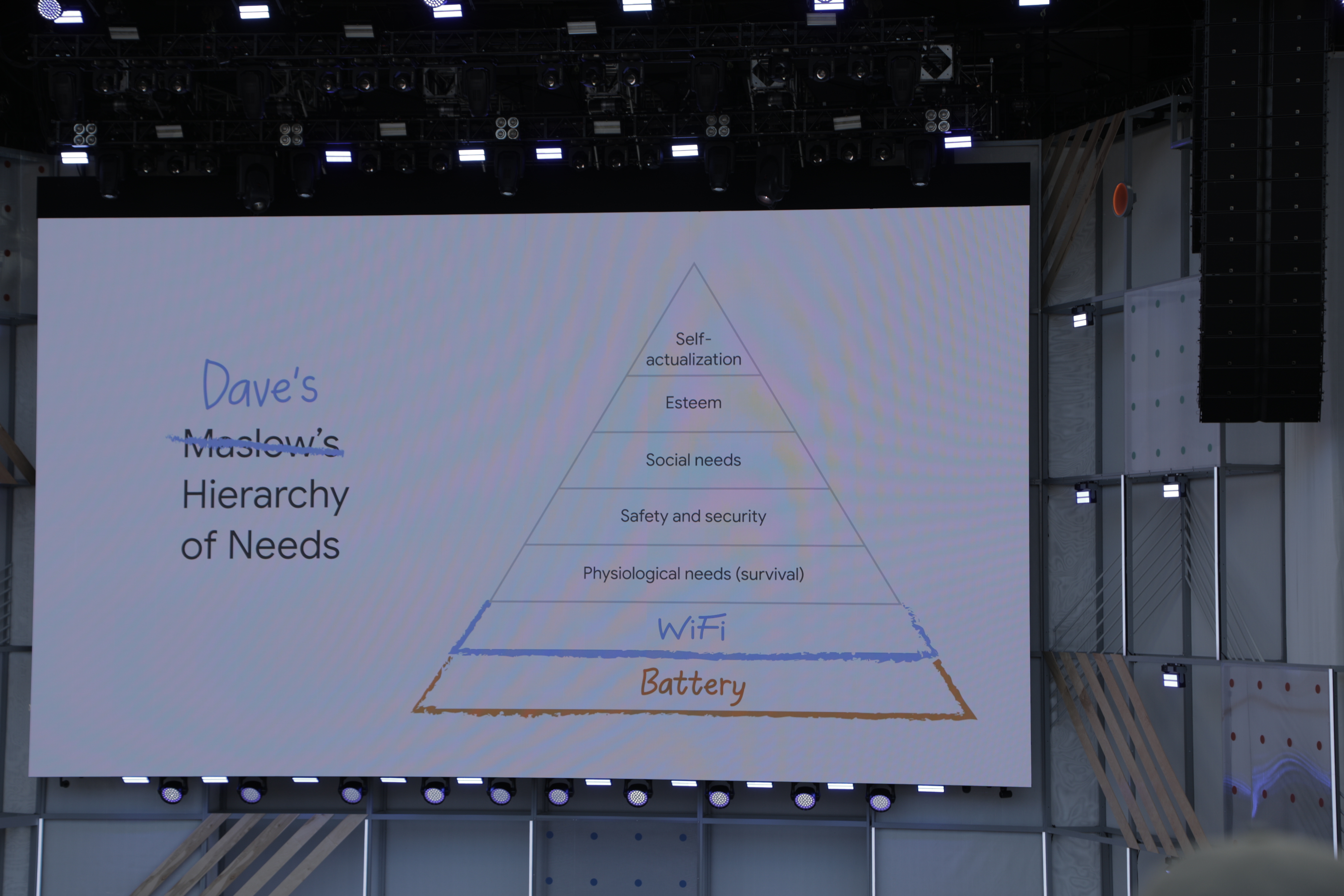

“One of the insights we’ve had as Google Photos has grown significantly over the years is that people spend a lot of time looking at photos inside of Google Photos,” explained Google Photos Product Lead, Dave Lieb, in an earlier interview with TechCrunch about the update. “They do it to the tune of about 5 billion photo views per day,” he added.

That got the team thinking that they should focus on solving some of the problems people see when they’re looking at their photos.

Google Photos will start doing just that with the changes rolling out this week.

For example, if you come across a photo that’s too dark, there will be a little button you can tap underneath the photo to fix the brightness. If the photo is on its side, you can press a button to rotate it. These are things you could have done before, of course, by accessing the editing tools manually. But the updated app will now make them one-tap fixes.

Also new are tools inspired by Google’s Photoscan technology that will fix photos of documents and paperwork, by zooming in, cropping and rectifying the photo.

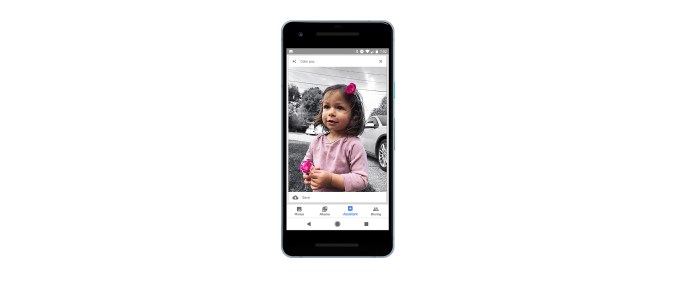

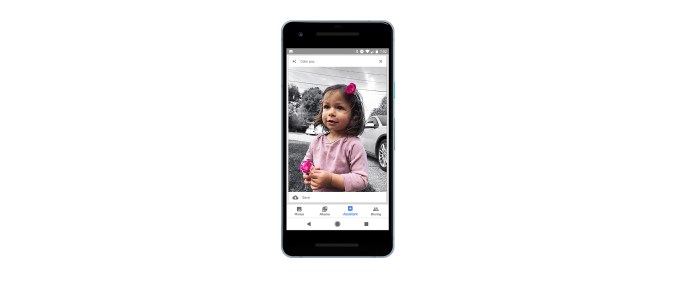

One tool will analyze who’s in the photo and prompt you to share it with them, similar to the previously launched sharing suggestions feature. Another prompts you to archive photos of old receipts. And one, called “Color Pop,” will identify when it could pop out whoever’s in the foreground by turning the background to black and white.

In addition to the new tools arriving this week, Google is also prepping a “Colorize” tool that will turn black-and-white photos into colorized images. This tool, too, was inspired by Photoscan, as Google found people were scanning in old family photos, including the black-and-white ones.

“Our team thought, what if we applied computer vision and A.I. to black-and-white photos? Could we re-create a color version of those photos?” said Lieb. They wanted to see if technology could be trained to re-colorize images so you could really see what it was like back then, or at least a close approximation. That’s how Colorize will work, when it’s ready.

A neural network will try to infer the colors that likely work best in the photo, like turning the grass green. Getting other things right, such as skin tone, could be trickier, so the team isn’t launching the feature until it’s “really right,” they said.

The new Google Photos features were announced along with news of a developer preview of the Google Photos Library API, which allows third-party developers to take advantage of Google Photos’ storage, infrastructure and machine intelligence. More on that is here.


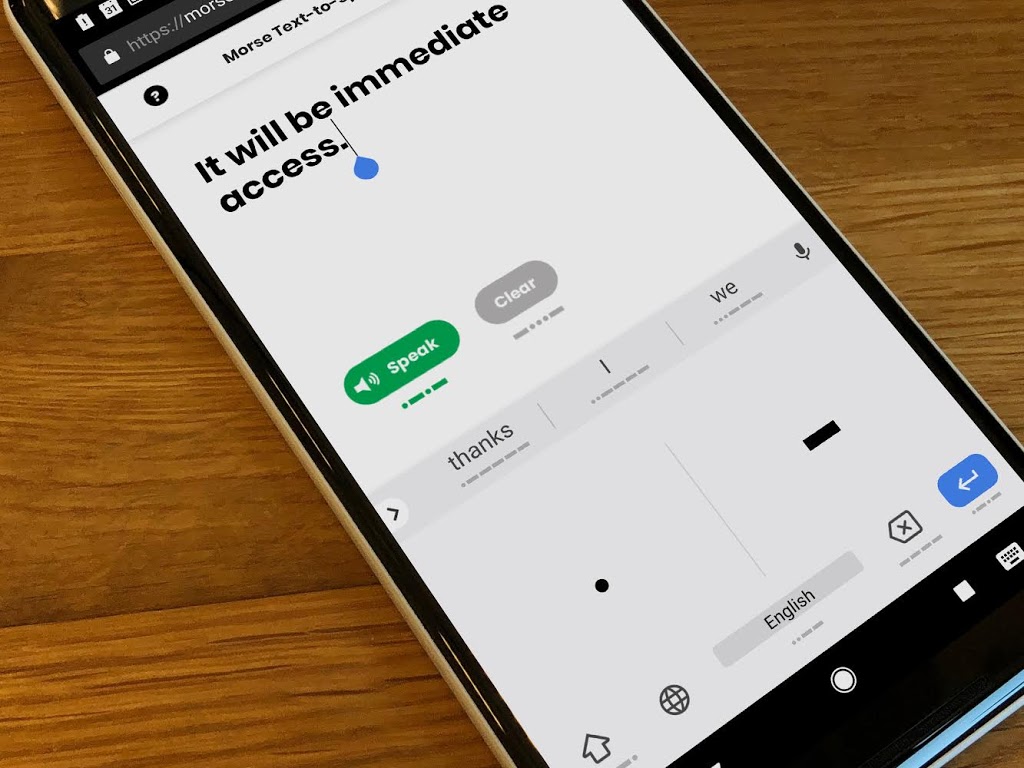

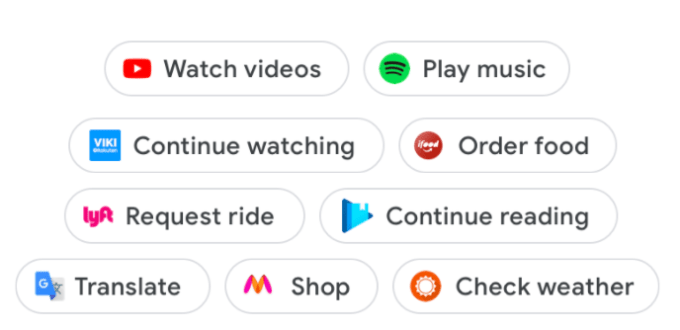

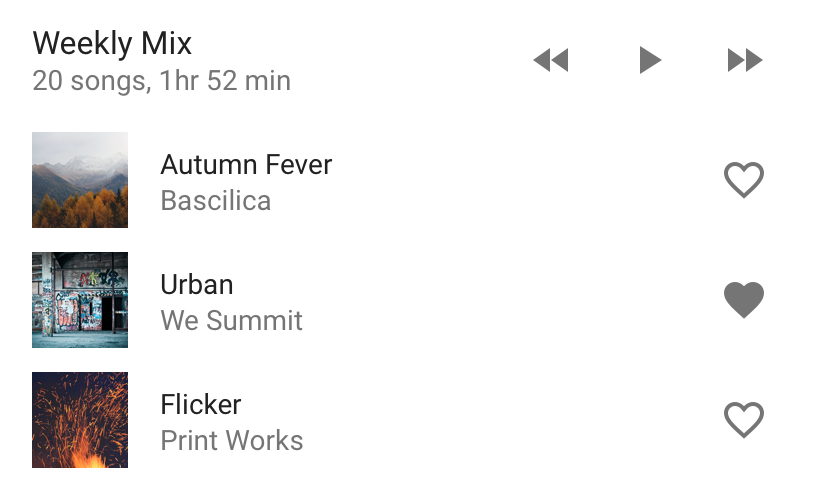

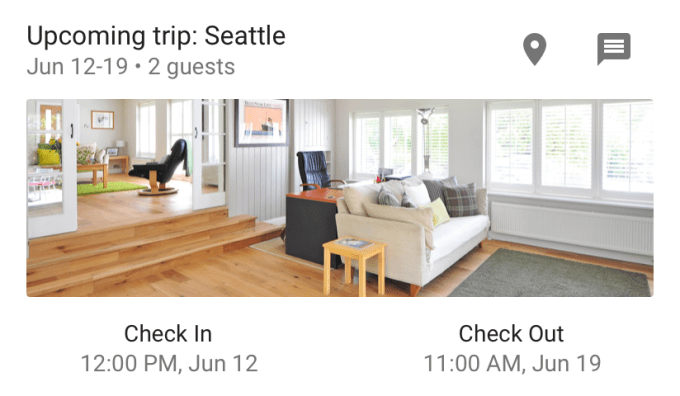

Slices is also meant to get users to interact more with the apps they have already installed, but the overall premise is a bit different from App Actions. Slices essentially provide users with a mini snippet of an app and they can appear in Google Search and the Google Assistant. From the developer’s perspective, they are all about driving the usage of their apps, but from the user’s perspective, they look like an easy way to get something done quickly.

“This radically changes how users interact with the app,” Cuthbertson said. She also noted that developers obviously want people to use their app, so every additional spot where users can interact with it is a win for them.