UK startup Fabula AI reckons it’s devised a way for artificial intelligence to help user generated content platforms get on top of the disinformation crisis that keeps rocking the world of social media with antisocial scandals.

Even Facebook’s Mark Zuckerberg has sounded a cautious note about AI technology’s capability to meet the complex, contextual, messy and inherently human challenge of correctly understanding every missive a social media user might send, well-intentioned or its nasty flip-side.

“It will take many years to fully develop these systems,” the Facebook founder wrote two years ago, in an open letter discussing the scale of the challenge of moderating content on platforms thick with billions of users. “This is technically difficult as it requires building AI that can read and understand news.”

But what if AI doesn’t need to read and understand news in order to detect whether it’s true or false?

Step forward Fabula, which has patented what it dubs a “new class” of machine learning algorithms to detect “fake news” — in the emergent field of “Geometric Deep Learning”; where the datasets to be studied are so large and complex that traditional machine learning techniques struggle to find purchase on this ‘non-Euclidean’ space.

The startup says its deep learning algorithms are, by contrast, capable of learning patterns on complex, distributed data sets like social networks. So it’s billing its technology as a breakthrough. (Its written a paper on the approach which can be downloaded here.)

It is, rather unfortunately, using the populist and now frowned upon badge “fake news” in its PR. But it says it’s intending this fuzzy umbrella to refer to both disinformation and misinformation. Which means maliciously minded and unintentional fakes. Or, to put it another way, a photoshopped fake photo or a genuine image spread in the wrong context.

The approach it’s taking to detecting disinformation relies not on algorithms parsing news content to try to identify malicious nonsense but instead looks at how such stuff spreads on social networks — and also therefore who is spreading it.

There are characteristic patterns to how ‘fake news’ spreads vs the genuine article, says Fabula co-founder and chief scientist, Michael Bronstein.

“We look at the way that the news spreads on the social network. And there is — I would say — a mounting amount of evidence that shows that fake news and real news spread differently,” he tells TechCrunch, pointing to a recent major study by MIT academics which found ‘fake news’ spreads differently vs bona fide content on Twitter.

“The essence of geometric deep learning is it can work with network-structured data. So here we can incorporate heterogenous data such as user characteristics; the social network interactions between users; the spread of the news itself; so many features that otherwise would be impossible to deal with under machine learning techniques,” he continues.

Bronstein, who is also a professor at Imperial College London, with a chair in machine learning and pattern recognition, likens the phenomenon Fabula’s machine learning classifier has learnt to spot to the way infectious disease spreads through a population.

“This is of course a very simplified model of how a disease spreads on the network. In this case network models relations or interactions between people. So in a sense you can think of news in this way,” he suggests. “There is evidence of polarization, there is evidence of confirmation bias. So, basically, there are what is called echo chambers that are formed in a social network that favor these behaviours.”

“We didn’t really go into — let’s say — the sociological or the psychological factors that probably explain why this happens. But there is some research that shows that fake news is akin to epidemics.”

The tl;dr of the MIT study, which examined a decade’s worth of tweets, was that not only does the truth spread slower but also that human beings themselves are implicated in accelerating disinformation. (So, yes, actual human beings are the problem.) Ergo, it’s not all bots doing all the heavy lifting of amplifying junk online.

The silver lining of what appears to be an unfortunate quirk of human nature is that a penchant for spreading nonsense may ultimately help give the stuff away — making a scalable AI-based tool for detecting ‘BS’ potentially not such a crazy pipe-dream.

Although, to be clear, Fabula’s AI remains in development at this stage, having been tested internally on Twitter data sub-sets at this stage. And the claims it’s making for its prototype model remain to be commercially tested with customers in the wild using the tech across different social platforms.

It’s hoping to get there this year, though, and intends to offer an API for platforms and publishers towards the end of this year. The AI classifier is intended to run in near real-time on a social network or other content platform, identifying BS.

Fabula envisages its own role, as the company behind the tech, as that of an open, decentralised “truth-risk scoring platform” — akin to a credit referencing agency just related to content, not cash.

Scoring comes into it because the AI generates a score for classifying content based on how confident it is it’s looking at a piece of fake vs true news.

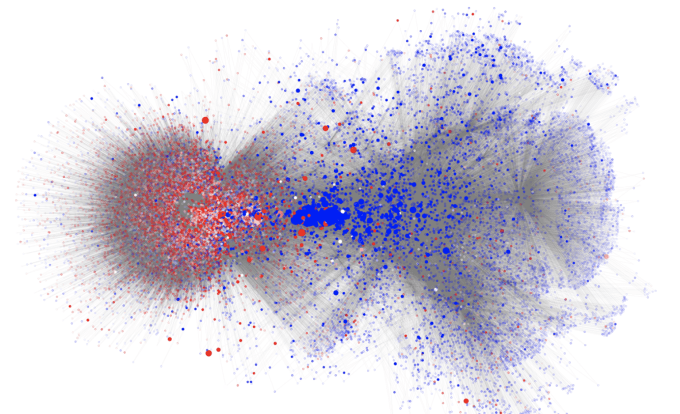

A visualisation of a fake vs real news distribution pattern; users who predominantly share fake news are coloured red and users who don’t share fake news at all are coloured blue — which Fabula says shows the clear separation into distinct groups, and “the immediately recognisable difference in spread pattern of dissemination”.

In its own tests Fabula says its algorithms were able to identify 93 percent of “fake news” within hours of dissemination — which Bronstein claims is “significantly higher” than any other published method for detecting ‘fake news’. (Their accuracy figure uses a standard aggregate measurement of machine learning classification model performance, called ROC AUC.)

The dataset the team used to train their model is a subset of Twitter’s network — comprised of around 250,000 users and containing around 2.5 million “edges” (aka social connections).

For their training dataset Fabula relied on true/fake labels attached to news stories by third party fact checking NGOs, including Snopes and PolitiFact. And, overall, pulling together the dataset was a process of “many months”, according to Bronstein, He also says that around a thousand different stories were used to train the model, adding that the team is confident the approach works on small social networks, as well as Facebook-sized mega-nets.

Asked whether he’s sure the model hasn’t been trained to identified patterns caused by bot-based junk news spreaders, he says the training dataset included some registered (and thus verified ‘true’) users.

“There is multiple research that shows that bots didn’t play a significant amount [of a role in spreading fake news] because the amount of it was just a few percent. And bots can be quite easily detected,” he also suggests, adding: “Usually it’s based on some connectivity analysis or content analysis. With our methods we can also detect bots easily.”

To further check the model, the team tested its performance over time by training it on historical data and then using a different split of test data.

“While we see some drop in performance it is not dramatic. So the model ages well, basically. Up to something like a year the model can still be applied without any re-training,” he notes, while also saying that, when applied in practice, the model would be continually updated as it keeps digesting (ingesting?) new stories and social media content.

Somewhat terrifyingly, the model could also be used to predict virality, according to Bronstein — raising the dystopian prospect of the API being used for the opposite purpose to that which it’s intended: i.e. maliciously, by fake news purveyors, to further amp up their (anti)social spread.

“Potentially putting it into evil hands it might do harm,” Bronstein concedes. Though he takes a philosophical view on the hyper-powerful double-edged sword of AI technology, arguing such technologies will create an imperative for a rethinking of the news ecosystem by all stakeholders, as well as encouraging emphasis on user education and teaching critical thinking.

Let’s certainly hope so. And, on the educational front, Fabula is hoping its technology can play an important role — by spotlighting network-based cause and effect.

“People now like or retweet or basically spread information without thinking too much or the potential harm or damage they’re doing to everyone,” says Bronstein, pointing again to the infectious diseases analogy. “It’s like not vaccinating yourself or your children. If you think a little bit about what you’re spreading on a social network you might prevent an epidemic.”

So, tl;dr, think before you RT.

Returning to the accuracy rate of Fabula’s model, while ~93 per cent might sound pretty impressive, if it were applied to content on a massive social network like Facebook — which has some 2.3BN+ users, uploading what could be trillions of pieces of content daily — even a seven percent failure rate would still make for an awful lot of fakes slipping undetected through the AI’s net.

But Bronstein says the technology does not have to be used as a standalone moderation system. Rather he suggests it could be used in conjunction with other approaches such as content analysis, and thus function as another string on a wider ‘BS detector’s bow.

It could also, he suggests, further aid human content reviewers — to point them to potentially problematic content more quickly.

Depending on how the technology gets used he says it could do away with the need for independent third party fact-checking organizations altogether because the deep learning system can be adapted to different use cases.

Example use-cases he mentions include an entirely automated filter (i.e. with no human reviewer in the loop); or to power a content credibility ranking system that can down-weight dubious stories or even block them entirely; or for intermediate content screening to flag potential fake news for human attention.

Each of those scenarios would likely entail a different truth-risk confidence score. Though most — if not all — would still require some human back-up. If only to manage overarching ethical and legal considerations related to largely automated decisions. (Europe’s GDPR framework has some requirements on that front, for example.)

Facebook’s grave failures around moderating hate speech in Myanmar — which led to its own platform becoming a megaphone for terrible ethnical violence — were very clearly exacerbated by the fact it did not have enough reviewers who were able to understand (the many) local languages and dialects spoken in the country.

So if Fabula’s language-agnostic propagation and user focused approach proves to be as culturally universal as its makers hope, it might be able to raise flags faster than human brains which lack the necessary language skills and local knowledge to intelligently parse context.

“Of course we can incorporate content features but we don’t have to — we don’t want to,” says Bronstein. “The method can be made language independent. So it doesn’t matter whether the news are written in French, in English, in Italian. It is based on the way the news propagates on the network.”

Although he also concedes: “We have not done any geographic, localized studies.”

“Most of the news that we take are from PolitiFact so they somehow regard mainly the American political life but the Twitter users are global. So not all of them, for example, tweet in English. So we don’t yet take into account tweet content itself or their comments in the tweet — we are looking at the propagation features and the user features,” he continues.

“These will be obviously next steps but we hypothesis that it’s less language dependent. It might be somehow geographically varied. But these will be already second order details that might make the model more accurate. But, overall, currently we are not using any location-specific or geographic targeting for the model.

“But it will be an interesting thing to explore. So this is one of the things we’ll be looking into in the future.”

Fabula’s approach being tied to the spread (and the spreaders) of fake news certainly means there’s a raft of associated ethical considerations that any platform making use of its technology would need to be hyper sensitive to.

For instance, if platforms could suddenly identify and label a sub-set of users as ‘junk spreaders’ the next obvious question is how will they treat such people?

Would they penalize them with limits — or even a total block — on their power to socially share on the platform? And would that be ethical or fair given that not every sharer of fake news is maliciously intending to spread lies?

What if it turns out there’s a link between — let’s say — a lack of education and propensity to spread disinformation? As there can be a link between poverty and education… What then? Aren’t your savvy algorithmic content downweights risking exacerbating existing unfair societal divisions?

Bronstein agrees there are major ethical questions ahead when it comes to how a ‘fake news’ classifier gets used.

“Imagine that we find a strong correlation between the political affiliation of a user and this ‘credibility’ score. So for example we can tell with hyper-ability that if someone is a Trump supporter then he or she will be mainly spreading fake news. Of course such an algorithm would provide great accuracy but at least ethically it might be wrong,” he says when we ask about ethics.

He confirms Fabula is not using any kind of political affiliation information in its model at this point — but it’s all too easy to imagine this sort of classifier being used to surface (and even exploit) such links.

“What is very important in these problems is not only to be right — so it’s great of course that we’re able to quantify fake news with this accuracy of ~90 percent — but it must also be for the right reasons,” he adds.

The London-based startup was founded in April last year, though the academic research underpinning the algorithms has been in train for the past four years, according to Bronstein.

The patent for their method was filed in early 2016 and granted last July.

They’ve been funded by $500,000 in angel funding and about another $500,000 in total of European Research Council grants plus academic grants from tech giants Amazon, Google and Facebook, awarded via open research competition awards.

(Bronstein confirms the three companies have no active involvement in the business. Though doubtless Fabula is hoping to turn them into customers for its API down the line. But he says he can’t discuss any potential discussions it might be having with the platforms about using its tech.)

Focusing on spotting patterns in how content spreads as a detection mechanism does have one major and obvious drawback — in that it only works after the fact of (some) fake content spread. So this approach could never entirely stop disinformation in its tracks.

Though Fabula claims detection is possible within a relatively short time frame — of between two and 20 hours after content has been seeded onto a network.

“What we show is that this spread can be very short,” he says. “We looked at up to 24 hours and we’ve seen that just in a few hours… we can already make an accurate prediction. Basically it increases and slowly saturates. Let’s say after four or five hours we’re already about 90 per cent.”

“We never worked with anything that was lower than hours but we could look,” he continues. “It really depends on the news. Some news does not spread that fast. Even the most groundbreaking news do not spread extremely fast. If you look at the percentage of the spread of the news in the first hours you get maybe just a small fraction. The spreading is usually triggered by some important nodes in the social network. Users with many followers, tweeting or retweeting. So there are some key bottlenecks in the network that make something viral or not.”

A network-based approach to content moderation could also serve to further enhance the power and dominance of already hugely powerful content platforms — by making the networks themselves core to social media regulation, i.e. if pattern-spotting algorithms rely on key network components (such as graph structure) to function.

So you can certainly see why — even above a pressing business need — tech giants are at least interested in backing the academic research. Especially with politicians increasingly calling for online content platforms to be regulated like publishers.

At the same time, there are — what look like — some big potential positives to analyzing spread, rather than content, for content moderation purposes.

As noted above, the approach doesn’t require training the algorithms on different languages and (seemingly) cultural contexts — setting it apart from content-based disinformation detection systems. So if it proves as robust as claimed it should be more scalable.

Though, as Bronstein notes, the team have mostly used U.S. political news for training their initial classifier. So some cultural variations in how people spread and react to nonsense online at least remains a possibility.

A more certain challenge is “interpretability” — aka explaining what underlies the patterns the deep learning technology has identified via the spread of fake news.

While algorithmic accountability is very often a challenge for AI technologies, Bronstein admits it’s “more complicated” for geometric deep learning.

“We can potentially identify some features that are the most characteristic of fake vs true news,” he suggests when asked whether some sort of ‘formula’ of fake news can be traced via the data, noting that while they haven’t yet tried to do this they did observe “some polarization”.

“There are basically two communities in the social network that communicate mainly within the community and rarely across the communities,” he says. “Basically it is less likely that somebody who tweets a fake story will be retweeted by somebody who mostly tweets real stories. There is a manifestation of this polarization. It might be related to these theories of echo chambers and various biases that exist. Again we didn’t dive into trying to explain it from a sociological point of view — but we observed it.”

So while, in recent years, there have been some academic efforts to debunk the notion that social media users are stuck inside filter bubble bouncing their own opinions back at them, Fabula’s analysis of the landscape of social media opinions suggests they do exist — albeit, just not encasing every Internet user.

Bronstein says the next steps for the startup is to scale its prototype to be able to deal with multiple requests so it can get the API to market in 2019 — and start charging publishers for a truth-risk/reliability score for each piece of content they host.

“We’ll probably be providing some restricted access maybe with some commercial partners to test the API but eventually we would like to make it useable by multiple people from different businesses,” says requests. “Potentially also private users — journalists or social media platforms or advertisers. Basically we want to be… a clearing house for news.”

Read Full Article

Read Full Article