A blog about how-to, internet, social-networks, windows, linux, blogging, tips and tricks.

09 July 2018

Dish Hopper devices get Google Assistant functionality

After promising the feature for the better part of a year, Dish’s Hopper line has finally gained Google Assistant support. The feature brings hands-free control to the receivers, covering the standard array of functions like play, pause, fast-forward and rewind, along with content search.

Here are a handful of examples from Google:

- “Turn on my Hopper”

- “Tune to channel 140”

- “Show me home improvement shows”

- “Open Game Finder on Hopper”

- “Rewind 30 seconds”, “Pause” and “Resume”

- “Record Game of Thrones on Hopper”

You get the idea.

Dish announced the feature back at CES; I’m not sure eight months qualifies as “soon” in terms of software updates, but there you go. The feature works with Hoppers that are paired with Assistant-enabled devices like Google Home smart speakers and Android handsets. The company apparently rolled the feature out to about one percent of users last week as a kind of large-scale test.

Dish, on the other hand, has supported Alexa for some time. Last May, the company brought the hands-free skill to Hopper, and it added support for its Joey receivers back in October. Amazon’s assistant also gained DVR recording support for Dish, TiVo, DIRECTV and Verizon this March.


Snapchat code reveals team-up with Amazon for “Camera Search”

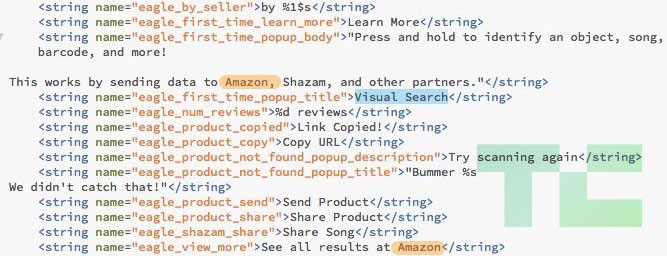

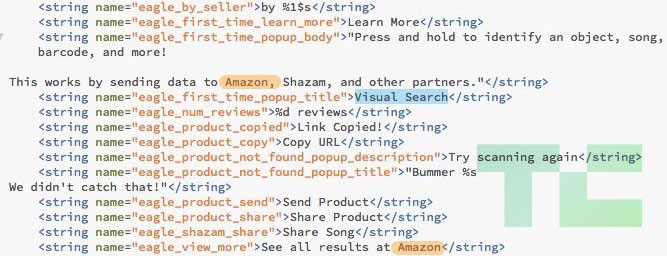

Snapchat is building a visual product search feature, codenamed “Eagle”, that delivers users to Amazon’s listings. Buried inside the code of Snapchat’s Android app is an unreleased “Visual Search” feature where you “Press and hold to identify an object, song, barcode, and more! This works by sending data to Amazon, Shazam, and other partners.” Once an object or barcode has been scanned you can “See all results at Amazon”.

Visual product search could make Snapchat’s camera a more general purpose tool for seeing and navigating the world, rather than just a social media maker. It could differentiate Snapchat from Instagram, whose clone of Snapchat Stories now has over twice the users and a six times faster growth rate than the original. And if Snapchat has worked out an affiliate referrals deal with Amazon, it could open a new revenue stream. That’s something Snap Inc direly needs after posting a $385 million loss last quarter and missing revenue estimates by $14 million.

TechCrunch was tipped off to the hidden Snapchat code by app researcher Ishan Agarwal. His tips have previously led to TechCrunch scoops about Instagram’s video calling, soundtracks, Focus portrait mode, and QR Nametags features that were all later officially launched. Amazon didn’t respond to a press inquiry before publishing time. Snap Inc gave TechCrunch a “no comment”, but the company’s code tells the story.

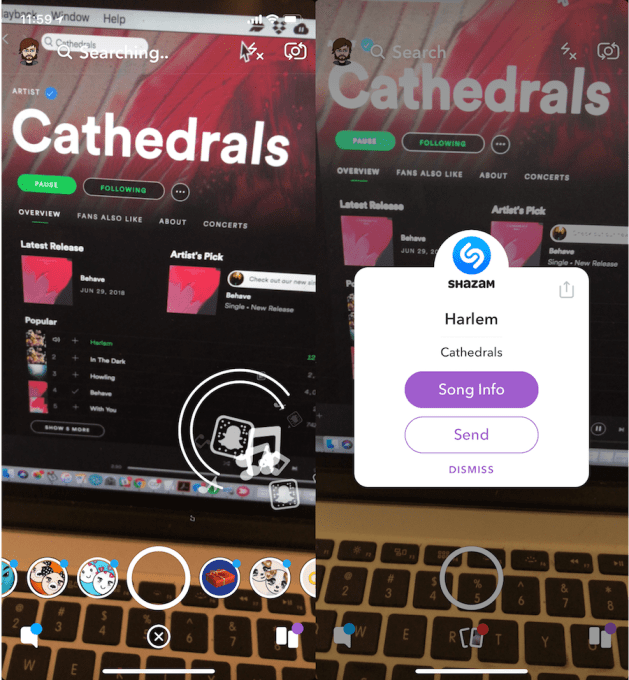

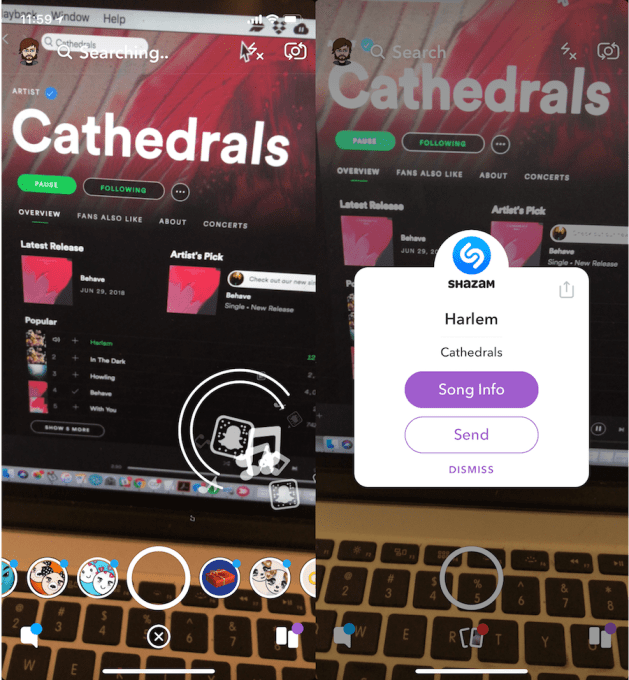

Snapchat first dabbled in understanding the world around you back in 2016 with its Shazam integration, which lets you tap and hold to identify a song playing nearby, check it out on Shazam, send it to a friend, or follow the artist on Snapchat. Project Eagle builds on this audio search feature to offer visual search through a similar interface and set of partnerships. The ability to identify purchasable objects or scan barcodes could turn Snapchat, which some view as a teen toy, into more of a utility.

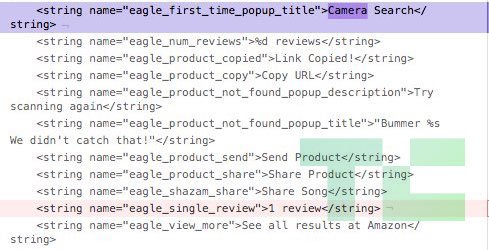

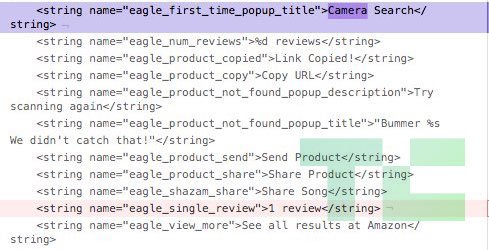

What’s Inside Snapchat’s Eagle Eye

Snapchat’s code doesn’t explain exactly how the Project Eagle feature will work, but in the newest version of Snapchat it has been renamed “Camera Search”. The code lists the ability to surface “sellers” and “reviews”, “Copy URL” of a product, and “Share” or “Send Product” to friends, likely via Snap messages or Snapchat Stories. In characteristic cool-kid teenspeak, the error message for “product not found” reads “Bummer, we didn’t catch that!”

Eagle’s visual search may be connected to Snapchat’s “context cards”, which debuted late last year and pull up business contact info, restaurant reservations, movie tickets, Ubers or Lyfts, and more. Surfacing a context card with details about ownable objects might be the first step toward getting users to buy them…and advertisers to pay Snap to promote them. It’s easy to imagine context cards being accessible for products tagged in Snap Ads as well as for those scanned through visual search. And Snap already has in-app shopping.

Being able to recognize what you’re seeing makes Snapchat more fun, but it’s also a new way of navigating reality. In mid-2017 Snapchat launched World Lenses that map the surfaces of your surroundings so you can place 3D animated objects like its Dancing Hotdog mascot alongside real people in real places. Snapchat also released a machine vision-powered search feature last year that compiles Stories of user-submitted Snaps featuring your chosen keyword, like videos with “puppies” or “fireworks”, even if the captions don’t mention them.

Snapchat was so interested in visual search that this year it reportedly held early-stage acquisition talks with machine vision startup Blippar. The talks with the UK augmented reality company fell through. Blippar has raised at least $99 million for its own visual search feature, but recently began to implode due to low usage and financing trouble. With the deal, Snap Inc might have been hoping to jumpstart its Camera Search efforts.

Snap calls itself a Camera Company, after all. But with the weak sales of its mediocre v1 Spectacles, the well-reviewed v2 failing to break into the cultural zeitgeist, and no other hardware products on the market, Snap may need to redefine what exactly that tagline means. Visual Search could frame Snapchat as more of a sensor than just a camera. With its popular use for rapid-fire selfie messaging, it’s already the lens through which some teens see the world. Soon, Snap could be ready to train its eagle eye on purchases, not just faces.


How to Remove the Vocals From Any Song Using Audacity

Do you want to create an instrumental version of your favorite song? Perhaps you need to make a backing track? Or do you have a song you produced but don’t have the original tracks for, and need to make a change to the vocal track?

Whatever the case, you can remove the vocals from any song with Audacity, a free and open source digital audio workstation. And in this article we’ll detail how to achieve this.

What to Know Before Removing Vocals From Songs

Before we proceed, let’s get a few things out of the way.

If you’re going to strip vocals from songs you don’t own, that needs to be for personal use only. Publicly using a self-made vocal-free version of someone else’s track (e.g. as a backing track for a live performance) wouldn’t be appropriate.

On the other hand, you might have some self-made audio that you need to strip the voice from in order to create some ambience. Or you may have some original music whose original recordings you’ve lost, and need to remove the vocals.

With Audacity, you have a couple of different ways to remove vocal tracks from a song, depending on how the vocals are placed in the mix:

- Vocals in the middle: Most songs are mixed this way, with the voice in the center (or just slightly to the left or right) and the instruments around it, creating the stereo effect.

- Vocals in one channel: This approach is typical of songs from the 1960s, when stereophonic sound was still being explored in the studio.

We’ll look at applying each of these options below. Don’t forget to head to the Audacity website to download and install your own copy of Audacity (for Windows, Mac, or Linux) before proceeding. If you have a copy already, make sure you’re using the feature-packed Audacity 2.2.0 (or later).

Vocal removal in Audacity is possible thanks to a built-in function, making it just as simple as removing background noise from an audio track.

Note: In addition to these native options, you can also use third-party voice removal plugins to remove vocals from a track. Each has its own instructions for applying the filter.

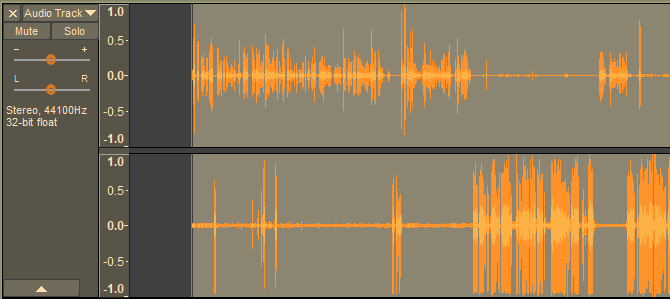

Stereo Track Vocal Removal With Audacity

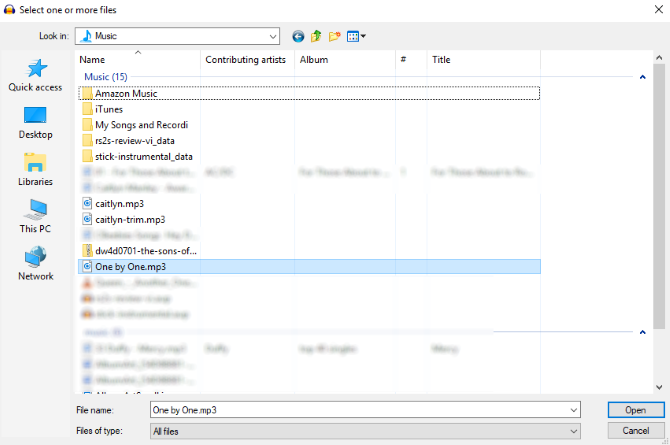

Begin by opening the audio file you wish to edit (File > Open).

Once loaded, play the track; make sure you can identify the areas where vocals appear. It’s a good idea to have some familiarity with the track before proceeding.

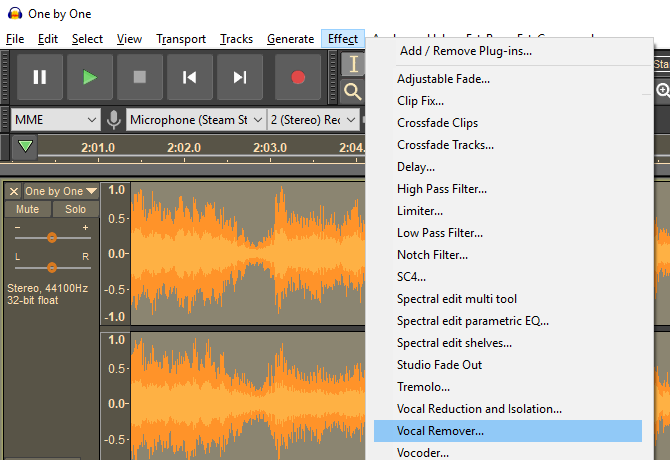

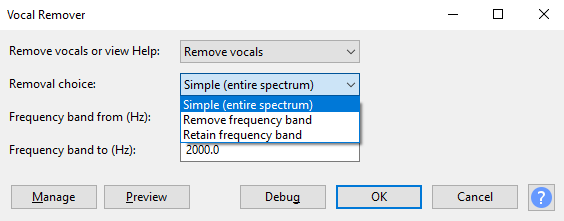

Next, select the track (click the header on the left, or press Ctrl + A) and choose Effect > Vocal Remover. You have three options for removal: Simple, Remove frequency band, and Retain frequency band. Start with Simple, and use the Preview button to check how this might be applied.

If you’re satisfied, click OK to proceed; otherwise, try the other options, previewing the track until you’re happy with the expected results. Note that if you accidentally apply vocal removal with the wrong settings, you can undo it with Ctrl + Z or Edit > Undo.

When you’re done, use the File > Save Project option to retain the changes. To create a new MP3 file, use File > Save other > Export as MP3.

Note that you’ll probably never achieve a perfect vocal-free track. You’ll need to accept a trade-off between some vocal artefacts and a lower quality, muddier instrumental track.
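Vocal removers of this kind generally rely on phase cancellation: a vocal mixed dead center is (nearly) identical in the left and right channels, so subtracting one channel from the other cancels it, while instruments panned off-center partly survive. The Python sketch below illustrates that general idea on lists of 16-bit sample values. The function name `cancel_center` is hypothetical, and this is not Audacity's own code. It also hints at where the trade-off comes from: anything else mixed in the center (often bass and kick drum) cancels along with the voice, and a vocal that differs even slightly between channels leaves a residue.

```python
def cancel_center(left, right):
    """Remove center-panned content from a stereo pair (hypothetical helper).

    left, right: equal-length sequences of 16-bit PCM sample values.
    Returns a mono list where each sample is (L - R) // 2, so anything
    identical in both channels cancels to zero.
    """
    if len(left) != len(right):
        raise ValueError("left and right channels must be the same length")
    # Halving keeps every result inside the 16-bit range [-32768, 32767].
    return [(lv - rv) // 2 for lv, rv in zip(left, right)]


# A center-panned "vocal" plus a "guitar" that only appears in the left channel.
vocal = [1000, -1000, 500]
guitar = [200, 200, 200]
left = [v + g for v, g in zip(vocal, guitar)]
right = list(vocal)

print(cancel_center(left, right))  # [100, 100, 100]: vocal gone, guitar at half level

# A vocal that is slightly louder on the left is only partly cancelled.
print(cancel_center([1000], [900]))  # [50]: residue of the off-center vocal
```

Note that the surviving guitar comes out at half its original level, which is one reason the instrumental can sound thinner after this kind of processing.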

Removing Vocals From Stereo/Mono Dual Track

If the mix has the vocals on one channel, it’s far easier to strip them away.

Begin by opening the Audio Track dropdown menu on the track header and selecting Split stereo track. This splits the audio into two separate tracks, one per channel. Play the audio, using the Mute button on each track to determine which one carries the vocals.

Once you’ve worked it out, all you need to do is delete the track carrying the vocals by clicking the X on its track header. Again, listen back to check that you’re happy with the result.

While a simpler and more effective option than using the Vocal Remover tool, this method can only be used on comparatively few songs.
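If you would rather script the split-and-delete approach than click through it, the sketch below uses Python's standard `wave` and `array` modules to copy a single channel of a stereo WAV file into a new mono file. It assumes uncompressed 16-bit PCM input (an MP3 would need converting to WAV first), and the function name `keep_channel` and the file names are hypothetical. It mirrors deleting the vocal track in Audacity rather than reproducing anything Audacity does internally.

```python
import sys
import wave
from array import array


def keep_channel(in_path, out_path, channel):
    """Write channel 0 (left) or 1 (right) of a 16-bit stereo WAV as mono.

    Dropping the other channel is the scripted equivalent of deleting
    the vocal-carrying track after splitting the stereo track.
    """
    if channel not in (0, 1):
        raise ValueError("channel must be 0 (left) or 1 (right)")
    with wave.open(in_path, "rb") as src:
        if src.getnchannels() != 2 or src.getsampwidth() != 2:
            raise ValueError("expected 16-bit stereo PCM input")
        rate = src.getframerate()
        frames = src.readframes(src.getnframes())

    samples = array("h")
    samples.frombytes(frames)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV stores samples little-endian

    # Stereo frames are interleaved L, R, L, R, ... so a stride-2 slice
    # starting at the channel index picks out just that channel.
    mono = samples[channel::2]

    if sys.byteorder == "big":
        mono.byteswap()  # back to little-endian for writing
    with wave.open(out_path, "wb") as dst:
        dst.setnchannels(1)
        dst.setsampwidth(2)
        dst.setframerate(rate)
        dst.writeframes(mono.tobytes())
```

For example, `keep_channel("song.wav", "no_vocals.wav", 1)` would keep the right channel of a hypothetical `song.wav` whose vocals sit on the left.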

Need Vocal-Free Music? Try These Options

Using Audacity (or an Audacity alternative) isn’t the only way to strip vocals from audio. It’s a good DIY option, but if you’re dissatisfied with the results, you may end up frustrated.

The good news is, it isn’t uncommon for songs to be released with accompanying instrumental tracks. Such tracks are often found on special edition albums. Some movie soundtracks might also feature instrumentals of featured songs.

You might also consider the easier option of getting hold of some karaoke tracks. Many of these can be found on YouTube, although there are various other places where you can get hold of them. Karaoke tracks are still sold on CD too, so don’t overlook this old-school option.

More Options That Aren’t Audacity

By now you should have successfully removed the vocal track from your song of choice. The results may be good or not so good, but either way the vocals will be gone.

Alternatively, you might have preferred to track down a karaoke CD with the same song included, vocals already removed. Or you’ve found an instrumental version of the song in question.

However, if you’ve done it yourself using Audacity, just consider how powerful this free piece of software is. In short, it’s as versatile as the various paid alternatives, such as Adobe Audition. Audacity does far more than vocal removal, too.

We’ve previously looked at how to use Audacity, which can be used for editing podcasts, converting vinyl albums to MP3, and creating sound effects.


BigClapper does away with tedious clapping, happiness

“If you want a picture of the future, imagine a robot clapping over a human face, forever,” wrote George Orwell, and his dire prediction has finally come to pass. This product, a year-old device that I had never seen before but now love, is called BigClapper, and it is basically an orb with a funny face and big white hands. Set it up somewhere and it will yell and clap endlessly, a sort of robotic tummler that can pick up your spirits while it drains your will to live.

The product, found by RobotStart, is wildly manic. BigClapper can be used at offices! In front of stores! At parties! It can clap as people walk by, encouraging them to come into your shop! It is red! It has hands!

While it’s not yet Alexa-compatible, BigClapper looks to be a model of future human-computer interaction. After all, what is purer than a big red face howling at you on the street while it claps maniacally in an effort to sell you more products? It’s a literal symbol of true capitalism in this modern era.

I, for one, welcome our clapping robot overlords.


Digging deeper into smart speakers reveals two clear paths

In a truly fascinating exploration into two smart speakers – the Sonos One and the Amazon Echo – BoltVC’s Ben Einstein has found some interesting differences in the way a traditional speaker company and an infrastructure juggernaut look at their flagship devices.

The post is well worth a full read, but the gist is this: Sonos, a very traditional speaker company, has produced a good speaker and modified its existing hardware to support smart home features like Alexa and Google Assistant. The Sonos One, notes Einstein, is a speaker first and smart hardware second.

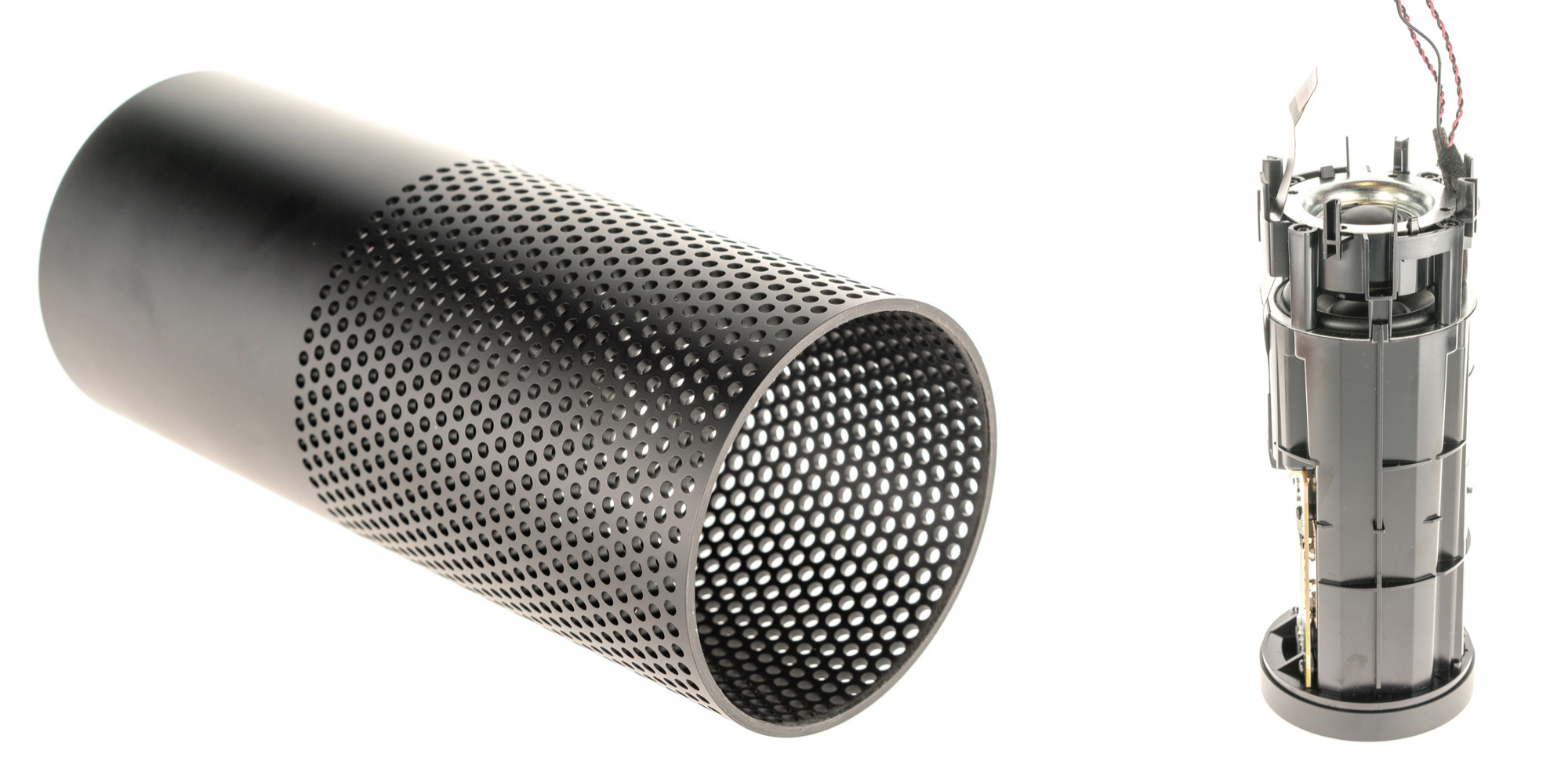

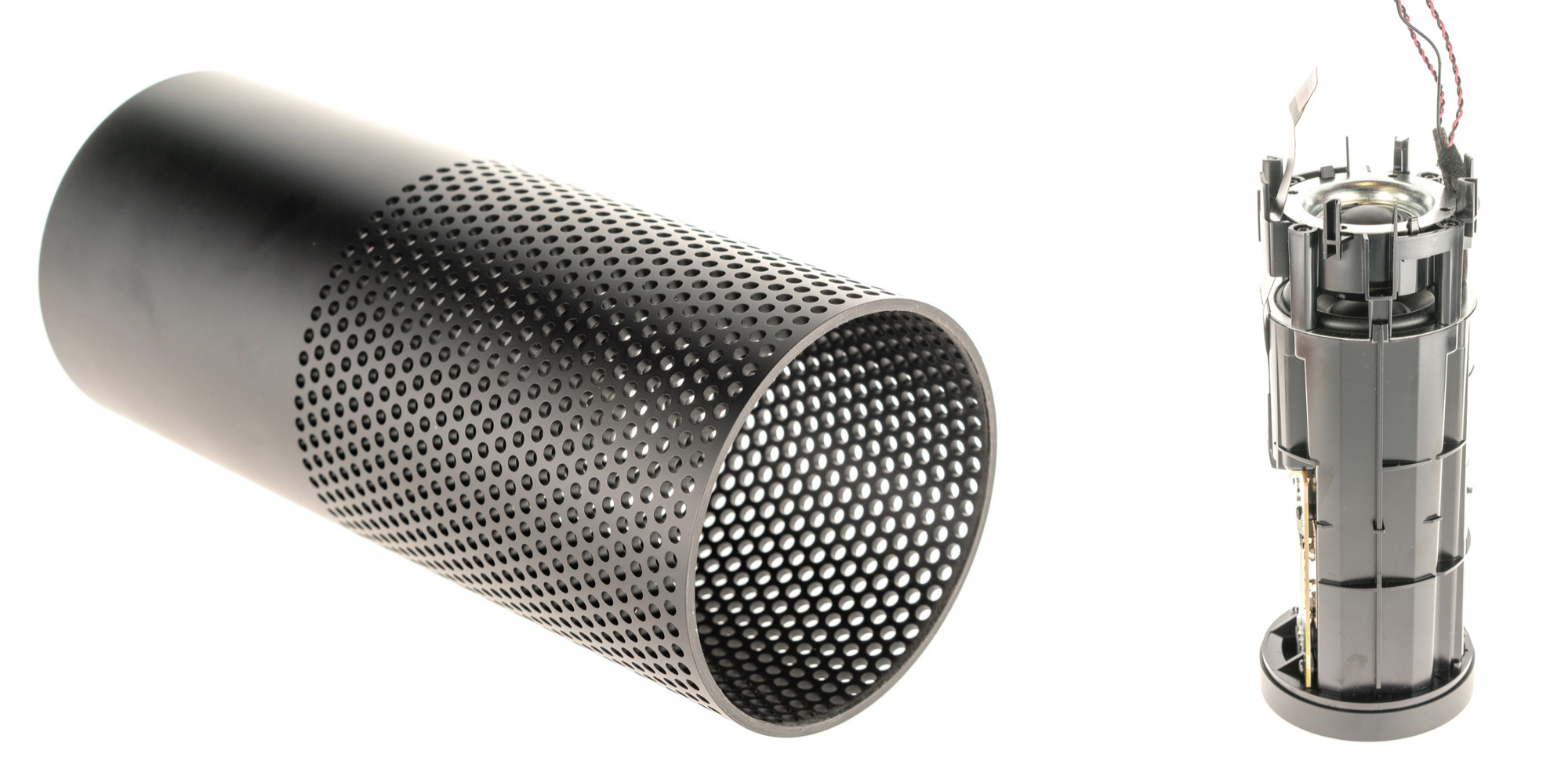

“Digging a bit deeper, we see traditional design and manufacturing processes for pretty much everything. As an example, the speaker grill is a flat sheet of steel that’s stamped, rolled into a rounded square, welded, seams ground smooth, and then powder coated black. While the part does look nice, there’s no innovation going on here,” he writes.

The Amazon Echo, on the other hand, looks like what would happen if an engineer were given an unlimited budget and told to build something that people could talk to. The design decisions are odd and intriguing, and it is ultimately less a speaker than a home conversation machine. It is also very expensive to make.

Of the Echo, he writes: “Pulling off the sleek speaker grille, there’s a shocking secret here: this is an extruded plastic tube with a secondary rotational drilling operation. In my many years of tearing apart consumer electronics products, I’ve never seen a high-volume plastic part with this kind of process. After some quick math on the production timelines, my guess is there’s a multi-headed drill and a rotational axis to create all those holes. CNC drilling each hole individually would take an extremely long time. If anyone has more insight into how a part like this is made, I’d love to see it! Bottom line: this is another surprisingly expensive part.”

Sonos, which has been making a form of smart speaker for fifteen years, is a CE company with cachet. Amazon, on the other hand, sees its devices as a way into living rooms and a delivery system for sales, and is fine with licensing its tech before making its own. Comparing the two is therefore a bit unfair. Einstein’s thesis is true: Sonos’ trajectory is troubled by its dependence on linear and closed manufacturing techniques, while Amazon spares no expense to make its products. But Sonos makes speakers that work together amazingly well, and it has done so for a decade and a half. If you compare its products (and I have) with competing smart speakers and non-audiophile “dumb” speakers, you will find that their UI, UX, and sound quality surpass most comers.

Amazon makes things to communicate with Amazon. This is a big difference.

Where Einstein is correct, however, is in his belief that Sonos is at a definite disadvantage. Sonos chases smart technology while Amazon and Google (and Apple, if their HomePod is any indication) lead. That said, there is some value to having a fully-connected set of speakers with add-on smart features vs. having to build an entire ecosystem of speaker products that can take on every aspect of the home theatre.

On the flip side, Amazon, Apple, and Google are chasing audio quality while Sonos leads. While we can say that in the future we’ll all be fine with tinny round speakers bleating out Spotify in various corners of our rooms, there is something to be said for a good set of woofers. Whether this nostalgic love of good sound survives this generation’s tendency to watch and listen to low-resolution media is anyone’s guess, but that’s Amazon’s bet to lose.

Ultimately, Sonos is a strong and fascinating company. An upstart that survived the great CE destruction wrought by Kickstarter and Amazon, it produces some of the best mid-range speakers I’ve used. Amazon makes a nice, almost alien, product, but given that it can easily be copied and stuffed into a hockey puck that probably costs less than the entire bill of materials for the Amazon Echo, it’s clear that Amazon’s goal isn’t to make speakers.

Whether the coming Sonos IPO succeeds depends partly on Amazon and Google playing ball with the speaker maker. The rest depends on the quality of its products and the dedication of Sonos users. That goodwill isn’t as valuable as a signed contract with major infrastructure players, but Sonos’ goodwill far exceeds what Amazon and Google have with their popular but potentially intrusive product lines. Sonos lives in the home while Google and Amazon want to invade it. That is where Sonos wins.


What Is Mylobot Malware? How It Works and What to Do About It

Cybersecurity is a constant battleground. In 2017, security researchers discovered some 23,000 new malware specimens per day (that’s roughly 960 per hour).

While that headline figure is shocking, it turns out that most of these specimens are variants of existing malware. Each has slightly different code that creates a “new” signature.

Every now and then, though, a truly new malware strain bursts onto the scene. Mylobot is one such example: it’s new, highly sophisticated, and gathering momentum.

What Is Mylobot?

Mylobot is a botnet malware that packs a serious amount of malicious intent. The new malware was first spotted by Tom Nipravsky, a security researcher for Deep Instinct, who says “the combination and complexity of these techniques were never seen in the wild before.”

This malware does indeed combine a wide range of sophisticated infection and obfuscation techniques into a potent package. Take a look:

- Anti-virtual machine (VM) techniques: The malware checks its local environment for signs of a virtual machine and, if it finds any, does not run.

- Anti-sandbox techniques: Very similar to the anti-VM techniques; the malware looks for signs that it is running in a sandbox and, if it finds them, does not run.

- Anti-debugging techniques: Prevent security researchers from working effectively on a malware sample by altering the malware’s behavior in the presence of certain debugging programs.

- Wrapping internal parts with an encrypted resource file: Essentially further protecting the internal code of the malware with encryption.

- Code injection techniques: Mylobot injects its own custom code into system processes to gain access and disrupt normal operation.

- Process hollowing: An attacker creates a new, legitimate process in a suspended state, then replaces its code in memory with the code that is meant to be hidden.

- Reflective EXE: The EXE file executes from memory rather than disk.

- Delay mechanism: The malware lies dormant for 14 days before connecting to its command and control servers.

Mylobot puts a lot of effort into staying hidden.

The anti-sandboxing, anti-debugging, and anti-VM techniques attempt to stop the malware from appearing in antimalware scans, as well as prevent researchers from isolating the malware on a virtual machine or in a sandboxed environment for analysis.

The reflective executable makes Mylobot even harder to detect, as there is no direct disk activity for your antivirus or antimalware suite to analyze.

Mylobot’s Evasive Maneuvers

According to what Nipravsky told Threatpost:

“The structure of the code itself is very complex—it’s a multi-threaded malware where each thread is in charge on implementing different capability of the malware.”

And:

“The malware contains three layers of files, nested on each other, where each layer is in charge of executing the next one. The last layer is using [the Reflective EXE] technique.”

Along with the anti-analysis and anti-detection techniques, Mylobot can wait up to 14 days before attempting to establish communications with its command and control servers.

When Mylobot does establish a connection, the botnet shuts down Windows Defender and Windows Update, as well as closing a number of Windows Firewall ports.

Mylobot Seeks and Kills Other Malware Types

One of the most interesting—and rare—functions of the Mylobot malware is its search-and-destroy function.

Unlike other malware, Mylobot comes ready to eradicate other types of malware already on the target system. Mylobot scans the system Application Data folders for common malware files and folders, and if it finds a certain file or process, Mylobot terminates it.

Nipravsky believes there are a couple of reasons for this rare and hyper-aggressive behavior. The rise of ransomware-as-a-service and other pay-to-play malware variants has significantly lowered the barrier to becoming a cyber-criminal. Some full-featured ransomware and exploit kits are even available for free as part of affiliate programs (the Saturn ransomware, for example).

Furthermore, the price of hiring a powerful botnet can drop extremely low for a large enough order, and some botnet operators have advertised day rates of only tens of dollars.

This ease of access means newcomers are encroaching on established cyber-crime operations.

“Attackers compete against each other to have as many ‘zombie computers’ as possible in order to increase their value when proposing services to other attackers, especially when it comes to spreading infrastructures.”

As a result, there is a sort of dramatic escalation of malware functionality to spread further, last longer, and reap more profitable rewards.

What Does Mylobot Do, Exactly?

Mylobot’s main functionality is exposing control of the system to the attacker. From there, the attacker has access to online credentials, system files, and much more.

The real damage ultimately depends on the intentions of whoever is attacking the system. Malware with Mylobot’s capabilities can easily cause massive damage, especially in an enterprise environment.

Mylobot also has links to other botnets, including DorkBot, Ramdo, and the infamous Locky network. If Mylobot is acting as a conduit for other botnets and malware types, anyone who falls foul of this malware is going to have a really bad time:

“The fact that the botnet behaves as a gate for additional payloads, puts the enterprise in risk for leak of sensitive data as well, following the risk of keyloggers / banking trojans installations.”

How Do You Stay Safe Against Mylobot?

Well, here’s the bad news: Mylobot is thought to have been actively infecting systems for over two years at this point. Its command-and-control servers first saw use in November 2015.

So, Mylobot appears to have dodged all other security researchers and firms for quite some time before running into Deep Instinct’s deep learning cyber research tools.

Unfortunately, your regular antivirus and antimalware tools aren’t going to pick something like Mylobot up—for the time being, at least.

Now that there is a Mylobot sample, security firms and researchers can create detection signatures for it. In turn, they’ll keep much closer tabs on Mylobot.

In the meantime, check out our list of the best computer security and antivirus tools! While your regular antivirus or antimalware might not pick up on Mylobot, there’s an awful lot of other malware out there it definitely will stop.

However, if it’s too late for you and you’re already worried about an infection, check out our complete guide to malware removal. It’ll help you and your system overcome the vast majority of malware, as well as begin to take steps to prevent it from happening again.

Read the full article: What Is Mylobot Malware? How It Works and What to Do About It

How to Boost Volume on a Chromebook Beyond Max

Is your Chromebook not loud enough? It’s a common problem across many of these laptops, including some of the best Chromebooks out there today like the Asus Flip C302.

Here’s how you can fix the issue by cranking up the volume of a Chromebook beyond its max settings.

How Is Chromebook Volume Boosting Even Possible?

You’re wondering how the volume can go beyond maximum, right? Well, it’s all about software.

Speakers can get much louder than what you hear. But just because they can get louder doesn’t mean they’ll maintain good audio quality. When a company makes a laptop, it tests how loud the speakers can get while still sounding clean, and sets that volume as the maximum.

In this guide, we’ll show you software tools that tell the computer to go beyond that volume. This means you will likely notice a drop in audio quality, usually in the form of distortion and crackling.
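To see why boosting past the maximum tends to crackle, here is a minimal Python sketch under simplified assumptions: audio is a list of floating-point samples in the range -1.0 to 1.0, and anything pushed past that range is hard-clipped. The helper names `apply_gain` and `clipped_fraction` are hypothetical, and real players and extensions may use limiters or other processing instead of plain clipping.

```python
import math


def apply_gain(samples, gain):
    """Multiply each sample by `gain`, then hard-clip to the -1.0..1.0 range."""
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


def clipped_fraction(samples, gain):
    """Return the share of samples that would exceed full scale at this gain."""
    over = sum(1 for s in samples if abs(s * gain) > 1.0)
    return over / len(samples)


# Assumed test signal: a 440 Hz tone at 80% of full scale,
# sampled at 8 kHz for 10 ms (80 samples).
SAMPLE_RATE = 8000
tone = [0.8 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(80)]

for gain in (1.0, 1.5, 2.0):
    print(f"gain {gain}: {clipped_fraction(tone, gain):.0%} of samples clipped")
```

At a gain of 1.0 nothing clips, because the tone peaks at 0.8. As the gain rises, a growing share of samples gets flattened at full scale, and those flattened peaks are what you hear as distortion.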

Caution: Some speakers can get damaged by cranking them up beyond the max volume. It’s best to use these tools sparingly.

Note: Unfortunately, even if your Chromebook can use Android apps, the best Android equalizer apps won’t have any effect on a Chromebook.

How to Boost Volume in Chrome Browser

On a Chromebook, you spend most of your time in the Chrome browser. If the volume of a YouTube clip or your favorite podcast is too low, an easy extension called Ears can boost it.

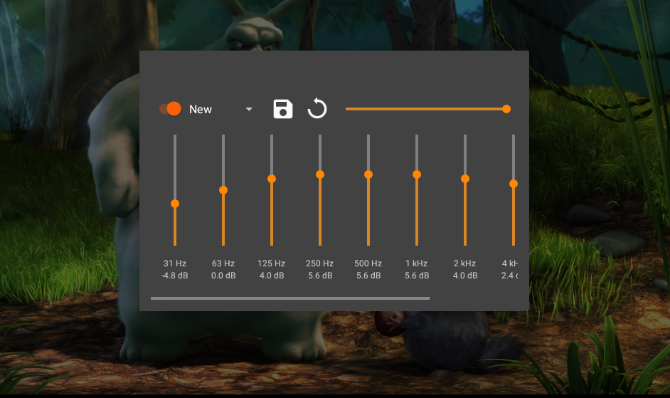

Ears is an equalizer that works with any web page open in Google Chrome. As long as the page is playing audio, Ears can boost the volume. While there are other extensions that do this, I found Ears to be the best because it has a simple volume bar on the left. The bar starts at your computer’s preset maximum level; click and drag it up to boost the volume.

The higher you drag the volume booster, the more distortion you will hear. I’d advise stopping the moment people’s voices start cracking or the bass thump sounds shrill rather than deep. Those are good signs of pushing the speakers beyond what’s healthy for them.

Of course, Ears is also an equalizer, so you can boost individual frequencies too. If your main issue is hearing people’s voices, try increasing only the relevant frequencies, generally the 80, 160, or 320 marks in the EQ dashboard.
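Boosting a single band, as an equalizer slider does, is commonly implemented with a peaking filter. The Python sketch below uses the widely published "Audio EQ Cookbook" peaking-filter formulas by Robert Bristow-Johnson. It illustrates the general technique only and is not Ears’ actual code; the centre frequency, gain, Q, and sample rate in the example are arbitrary assumptions.

```python
import cmath
import math


def peaking_coefficients(f0, fs, gain_db, q):
    """Return normalized (b, a) biquad coefficients for one peaking EQ band.

    Formulas follow the RBJ Audio EQ Cookbook peaking filter.
    """
    a_lin = 10 ** (gain_db / 40)      # square root of the linear peak gain
    w0 = 2 * math.pi * f0 / fs        # centre frequency in radians per sample
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)

    b = [1 + alpha * a_lin, -2 * cos_w0, 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * cos_w0, 1 - alpha / a_lin]
    # Normalize so that a[0] == 1.
    return [x / a[0] for x in b], [x / a[0] for x in a]


def magnitude_at(b, a, f, fs):
    """Evaluate the filter's amplitude gain |H(e^jw)| at frequency f (Hz)."""
    z_inv = cmath.exp(-1j * 2 * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return abs(num / den)


def apply_biquad(samples, b, a):
    """Filter a list of samples with a Direct Form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


# Example: a +6 dB boost centred on 320 Hz at an assumed 48 kHz sample rate.
b, a = peaking_coefficients(f0=320, fs=48000, gain_db=6.0, q=1.0)
print(f"gain at 320 Hz: {magnitude_at(b, a, 320, 48000):.3f}x")
print(f"gain at 5 kHz:  {magnitude_at(b, a, 5000, 48000):.3f}x")
```

The centre of the band gets the full boost (about 2x in amplitude for +6 dB), while frequencies far from it stay close to unchanged, which is why a targeted EQ boost distorts less than raising everything at once.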

Download: Ears: Bass Boost, EQ Any Audio for Chrome (Free)

How to Boost Volume on Chromebook for Videos

If you’ve downloaded a movie to watch offline, your volume-boosting options depend on the app you use to play it.

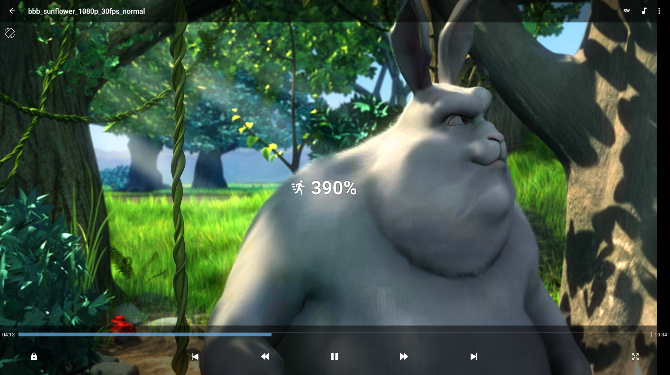

Forget about the default Chrome video player. You’ll need to install Android apps on your Chromebook instead. I’ve had the most consistent success with two apps so far: VLC and MX Player.

VLC: Good for Boosting and Equalizing, But Complicated

VLC is one of our old favorites, and it can play any file you throw at it. It’s also one of the best desktop apps to make the jump to Android (and Chrome). However, the audio boost option is a little hidden.

Here’s how to increase the volume of any file with VLC for Android:

- Once the video is playing, click the screen to bring up the playback bar.

- In the playback bar, click Options (the three-dot icon).

- That opens a small black window overlaid on the video. Click Equalizer, which looks like three lines with some buttons on them.

- Now you are finally in VLC’s equalizer and can boost the volume. The horizontal bar at the top is the volume meter; drag it to the right to boost the volume.

- As with Ears, you can use the equalizer instead of an overall volume boost. For most movies, try switching to the “Live” preset; it should usually give you a discernible increase without compromising your speakers.

Download: VLC for Android (Free)

MX Player: Good for Easy Boosting

MX Player may be the best all-in-one video player for Android, and it makes boosting volume beyond the max simple, which is what most people are looking for.

Start any video file in MX Player. To boost the volume, swipe up and down on the touchscreen, or swipe up and down with two fingers on the trackpad. MX Player can boost the volume to double your current maximum, so it’s the easiest way to get the job done.
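For a sense of scale, here is the decibel arithmetic as a short Python sketch. It assumes “double” means doubling the signal amplitude, which works out to roughly +6 dB; that reading is an assumption, and a doubling of perceived loudness is often cited as closer to +10 dB. The helper names are hypothetical.

```python
import math


def gain_to_db(gain):
    """Convert a linear amplitude multiplier to decibels."""
    return 20 * math.log10(gain)


def db_to_gain(db):
    """Convert decibels back to a linear amplitude multiplier."""
    return 10 ** (db / 20)


print(f"2x amplitude = {gain_to_db(2.0):+.2f} dB")   # 2x amplitude = +6.02 dB
print(f"+10 dB = {db_to_gain(10):.2f}x amplitude")   # +10 dB = 3.16x amplitude
```

The same conversion applies to any slider labelled in dB, such as a preamp control.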

Note: Volume boost in MX Player is enabled by default. But in case it doesn’t work, go to Menu > Tools > Settings > Audio and make sure Volume Boost is ticked.

Download: MX Player for Android (Free)

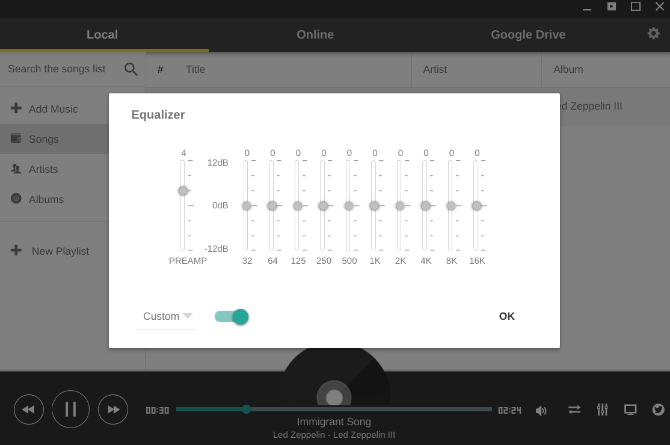

How to Boost Volume on Chromebook for Music

In most cases, you will use an online music streaming service to listen to tunes on a Chromebook. And in that case, you can always boost the volume through the Ears extension mentioned above.

But if you prefer offline music or have no choice (like when you’re on a plane and need to plug in your headphones), there are apps that can help you.

For offline listening on a Chromebook, we recommend Enjoy Music Player for all your music needs. It comes with its own equalizer and a built-in volume boost.

- Click the equalizer button.

- Switch it on by clicking the toggle (it should turn blue).

- Click and drag the Preamp button higher to boost the volume.

Download: Enjoy Music Player for Chrome (Free)

Get a Bluetooth Speaker for Your Chromebook

All of these methods will artificially boost your Chromebook’s volume beyond max, but like I said at the start, use these rarely. Overusing that artificial boost can damage your speakers.

If your Chromebook’s volume is really low and you use it often for media, you’re better off grabbing a pair of good and affordable Bluetooth speakers.

Read the full article: How to Boost Volume on a Chromebook Beyond Max

Apple releases new iPad, FaceID ads

Apple has released a handful of new ads promoting the iPad’s portability and convenience over both laptops and traditional paper solutions. The 15-second ads focus on how the iPad can make even the most tedious things — travel, notes, paperwork, and ‘stuff’ — just a bit easier.

Three out of the four spots show the sixth-generation iPad, which was revealed at Apple’s education event in March, and which offers a lower-cost ($329 in the U.S.) option with Pencil support.

The ads were released on Apple’s international YouTube channels (UAE, Singapore, and United Kingdom).

This follows another 90-second ad released yesterday that focuses on FaceID. The commercial shows a man in a gameshow-type setting who is asked to remember the banking password he created earlier that morning. He struggles for an excruciating amount of time before realizing he can access the banking app via FaceID.

There has been some speculation that FaceID may be incorporated into some upcoming models of the iPad, though we’ll have to wait until Apple’s next event (likely in September) to find out for sure.

Samsung’s new India phone factory is ‘world’s largest’

This week Samsung is opening what it’s calling the world’s largest mobile phone factory in the world’s second-largest smartphone market. The expansion of its Noida (New Okhla Industrial Development Authority) facility, which will be fully complete in 2020, is expected to nearly double the plant’s phone-production capacity, from 68 million to 120 million phones per year.

The electronics giant has been producing phones in the country for well over a decade (the original factory dates back to 1996), while much of the competition has mostly been dabbling. Earlier this year, for instance, Apple started a manufacturing trial run of the iPhone 6S, having previously done a small batch of the iPhone SE.

Along with bringing jobs, such localized manufacturing could also go a ways toward bringing the cost of devices down. India’s government, naturally, is excitedly embracing the announcement as part of its “Make in India” initiative. As such, Prime Minister Narendra Modi was on hand for the opening ceremony, along with South Korean president Moon Jae-in, repping his home country’s largest company.

“Our Noida factory, the world’s largest mobile factory, is a symbol of Samsung’s strong commitment to India, and a shining example of the success of the Government’s ‘Make in India’ program,” Samsung India CEO HC Hong said in a release tied to the news. “Samsung is a long-term partner of India. We ‘Make in India’, ‘Make for India’ and now, we will ‘Make for the World’. We are aligned with Government policies and will continue to seek their support to achieve our dream of making India a global export hub for mobile phones.”

India represents a massive — and growing — smartphone market. Last year the country passed the U.S., becoming the number two market after China. A commitment to local manufacturing will no doubt go a long way for Samsung, which currently ranks as the number two smartphone maker in the country, behind Xiaomi.

The Great British Hack-Off summer festival hackathon will aim at Brexit

It’s very hard to know what the effects of Brexit are “on the ground”. Local news no longer has much of a business model to concentrate on specific subjects or campaigns. Social media is a mess of local Facebook groups that only locals can see. MPs often ignore email and online campaigns from constituents.

The Great British Hack-Off aims to address this. Through an intensive, two-day, overnight “hackathon,” it aims to build a groundswell of interest in improving local communities and economies, and to connect people with their decision makers.

It will be held by Tech For UK (Twitter, Hashtag: #GBhackoff, Instagram, Facebook), the tech industry body calling for a meaningful people’s vote on Brexit with the option to Remain, and by the anti-Brexit group Best For Britain.

Anyone interested can apply to attend the event via this form.

The Great British Hack-Off will ask a number of questions and try to build products that address the answers.

Are local community projects, some formerly funded by the EU, still going? Are they being replaced? What about local factories and businesses? What about health services? Are local or central governments stepping in to help, or are people’s concerns being ignored? Is European and other foreign investment ebbing away from local communities, or is it being replaced? Are local news sources sharing what is going on?

What are the human stories? How can social media and video be used to tell those stories best?

The Great British Hack-Off will be a festival of tech and creativity to address these issues.

Tech For UK says the ultimate goal will be to engage the tech community to help Best for Britain connect people in local communities to the information they need on Brexit and, in turn, connect them to their decision makers and MPs. Attendees at the hackathon will also be able to work on their own projects and ideas related to Brexit.

Tech For UK says this will be the first event in a series, to be continued at other cities around the UK, not just in London.

Structured as a hackathon, it will be held at a central London venue, bringing together engineers, designers, storytellers, marketers, data scientists, artists, journalists / PR / media people, analytics experts and social media influencers to work on these problems.

Participants will be selected from applications and given full instructions about the event.

They say there will be capacity for 120 people, with the option to stay overnight at the hackathon. Food and beverages will be provided.

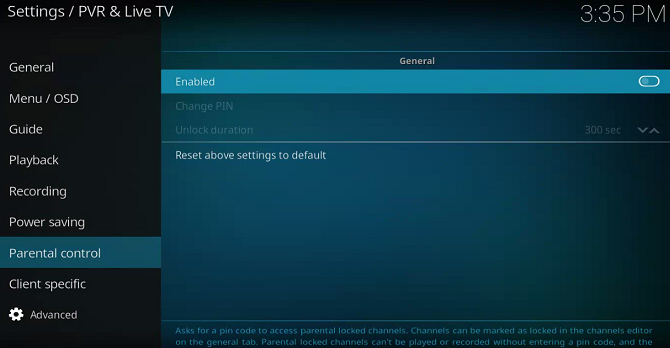

How to Enable Parental Controls on Kodi

Kodi is one of the best ways to watch classic movies, organize your locally-saved TV series and movies, and stream content to other screens in your home. But its real strength lies in its availability of add-ons.

The official Kodi repo boasts dozens of them, and there’s a near-endless supply of third-party content. However, not all Kodi add-ons are suitable for young eyes.

So, what can you do if you have kids who frequently stream media content on your Kodi device? Thankfully, Kodi comes with built-in parental controls. Let’s take a closer look.

How to Enable Parental Controls on Kodi

To turn on the parental controls and create a PIN, follow the instructions below:

- Open the Kodi app.

- Click on the Settings menu in the lower right-hand corner.

- Choose PVR and Live TV settings.

- In the menu on the left-hand side of the screen, select Parental Controls.

- Flick the toggle next to Enable into the On position.

- Click on Change PIN.

- Enter your desired lock code.

- Choose a duration of time before the channel will automatically re-lock itself.
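The re-lock timer in the last step behaves like a simple timeout. The Python class below is a toy model of that behaviour for illustration only: it is not Kodi’s implementation, and `ParentalLock`, `unlock`, and `is_locked` are hypothetical names.

```python
import hmac
import time
from typing import Callable, Optional


class ParentalLock:
    """Toy PIN lock that automatically re-locks after a fixed duration."""

    def __init__(self, pin: str, relock_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._pin = pin
        self._relock_seconds = relock_seconds
        self._clock = clock                      # injectable so the timeout can be tested
        self._unlocked_at: Optional[float] = None

    def unlock(self, attempt: str) -> bool:
        """Unlock if the PIN matches, comparing in constant time."""
        if hmac.compare_digest(attempt.encode(), self._pin.encode()):
            self._unlocked_at = self._clock()
            return True
        return False

    def is_locked(self) -> bool:
        """Report the lock state, re-locking once the duration has elapsed."""
        if self._unlocked_at is None:
            return True
        if self._clock() - self._unlocked_at >= self._relock_seconds:
            self._unlocked_at = None
            return True
        return False


# Usage with a fake clock: unlock, then watch the lock re-engage after 5 minutes.
now = [0.0]
lock = ParentalLock("1234", relock_seconds=300, clock=lambda: now[0])
lock.unlock("1234")
now[0] = 299
print(lock.is_locked())   # False
now[0] = 300
print(lock.is_locked())   # True
```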

Parental controls are just one of many hidden Kodi features. Here’s a list of a few other awesome features you might not know about:

- Share your Kodi library on multiple devices

- Set up a remote control for the Kodi app

- Watch live TV on Kodi

- Record live TV to watch later

- Play retro games via Kodi on a Raspberry Pi

And remember, if all this sounds too complicated, check out our complete Kodi guide for beginners as well as our collection of the most essential Kodi tips to know.

Image Credit: taromanaiai/Depositphotos

Read the full article: How to Enable Parental Controls on Kodi

3 Email Folders You Should Be Using to Keep Your Inbox Organized

Email folders are one of the most important tools for keeping your inbox tidy. Without them, your mailbox soon becomes a jumbled mess of tasks, reference items, junk, and more.

Sifting these messages into folders lets you better keep track of them and keeps your inbox tidy. We’ve talked before about the only folders you need for inbox zero, but even if you aren’t an inbox zero person, here are three important email folders you should have anyway.

1. The “Follow Up” Email Folder

It’s really easy to forget a task that an email requires as soon as you click away from it. Once the email is buried in your inbox, you’ll lose that fleeting thought (unless you add a task in your to-do app).

That’s why you need a folder called Follow Up. Put emails here that require some action on your part. As soon as they’re done, move them out. This way, you can open this folder anytime and complete outstanding work.

2. The “Reference” Email Folder

Your email probably contains lots of receipts, reminders, instructions, and other important documents you don’t want to lose. Searching is always an option for finding these, but that isn’t too efficient.

The Reference folder is the place for anything you’ll need in the future. File important documents there, and you’ll never lose track of them again.

3. Due Date Folders for Tasks

For some people, folders based on categories don’t work well. If you’re someone like this, try creating folders based on due dates instead.

You could have a Today folder for urgent tasks, a This Week folder for emails that you want to get to soon, and a This Month folder for low-priority tasks. This lets you check in and complete tasks based on how important they are.
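If you want to automate that triage, the rule can be sketched in Python. This is a hypothetical helper, not a feature of any particular email client. It assumes each message has a known due date, and it treats anything due today or overdue as Today, anything due within the next seven days as This Week, and everything later as This Month.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple


def due_folder(due: date, today: date) -> str:
    """Pick a folder name from how soon the task is due."""
    if due <= today:
        return "Today"                  # due today or already overdue
    if due <= today + timedelta(days=7):
        return "This Week"
    return "This Month"                 # assumption: anything further out is low priority


def sort_by_due_date(emails: List[Tuple[str, date]], today: date) -> Dict[str, List[str]]:
    """Group (subject, due date) pairs into the three due-date folders."""
    folders: Dict[str, List[str]] = {"Today": [], "This Week": [], "This Month": []}
    for subject, due in emails:
        folders[due_folder(due, today)].append(subject)
    return folders


today = date(2018, 7, 9)
inbox = [
    ("Approve invoice", date(2018, 7, 9)),
    ("Review draft", date(2018, 7, 12)),
    ("Renew domain", date(2018, 8, 1)),
]
print(sort_by_due_date(inbox, today))
# {'Today': ['Approve invoice'], 'This Week': ['Review draft'], 'This Month': ['Renew domain']}
```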

These three important folders are just suggestions—feel free to adapt them to what works for you! If you don’t want to categorize your emails manually, check out our guide to creating email filters that can do it for you.

Read the full article: 3 Email Folders You Should Be Using to Keep Your Inbox Organized
