The emergence of online hyperlocal services and e-commerce firms in India has led to the creation of about 200,000 jobs for blue-collar workers who deliver items to customers, according to industry estimates.

But it is also the kind of job that continues to see a high attrition rate. This means that companies like Zomato, Swiggy, Dunzo, Amazon India, and Flipkart have to replace a significant portion of their delivery workforce every three to four months.

“A small portion of these workers either switch jobs to go to a different delivery company, or they take up a different job,” said Madhav Krishna. “And a large chunk of them end up going back to their villages to work on their farms.”

“There is a cyclical migration phenomenon in India wherein a very large population migrates from villages to cities looking for a job. They work in cities for a few months and then return to their hometowns in time for the next crop harvesting season,” he said.

The attrition rate is so high that continually hiring new people has become a major challenge for these companies, Krishna said. And with the e-commerce and on-demand delivery space projected to grow four to five times in India by 2025, acquiring workers efficiently is a prerequisite for growth.

Three years ago, Krishna, who earned a master's degree in machine learning from Columbia University before moving to Bangalore, founded Vahan, a startup that aims to help these companies find blue-collar workers at scale.

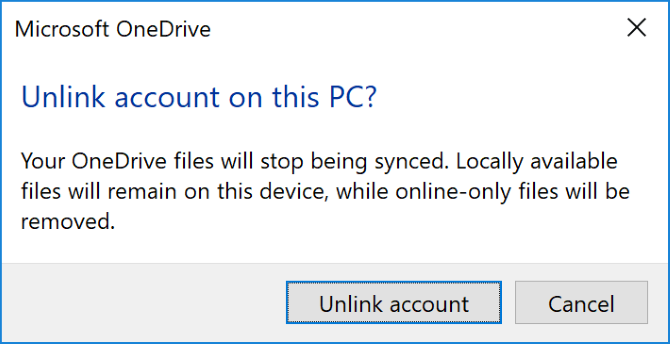

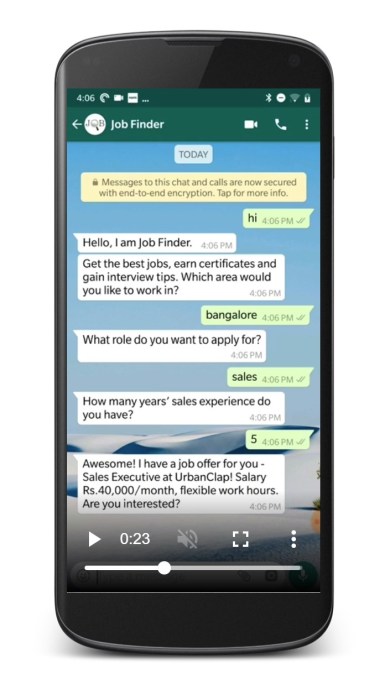

Vahan operates a WhatsApp Business account through which it tells potential candidates about available jobs in the industry. Interested candidates answer a series of qualifying questions and are then screened and authenticated by Vahan.

Much of this process, which takes only minutes, is automated by an AI-driven chatbot. Vahan (Hindi for “vehicle”) then directs shortlisted candidates to its clients for walk-in interviews and onboarding. Its clients today include food delivery firms Zomato and Swiggy, hyperlocal concierge service Dunzo, and logistics company Lalamove.

“There are three things that need to come together: What do people want? What are their capabilities? And the third is, what is available in the market?” Krishna said. “It’s really a matching problem that we’re trying to solve. We are using data and machine learning to solve a complex matching problem.”
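To make the “matching problem” Krishna describes more concrete, here is a toy sketch of how the three factors he names could fit together: what a person wants (preferred roles), what they are capable of (for example, owning a two-wheeler and holding a license), and what the market has available (open positions). This is not Vahan's system, which the company says relies on data and machine learning; the hand-written score, the greedy assignment, and every name and field below are hypothetical assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical data model; none of these fields come from Vahan.
@dataclass
class Candidate:
    name: str
    city: str
    preferred_roles: set[str]  # what the person wants
    has_two_wheeler: bool      # a capability
    has_license: bool          # a capability

@dataclass
class Job:
    employer: str
    role: str
    city: str
    openings: int              # what the market has available
    needs_vehicle: bool

def is_eligible(c: Candidate, j: Job) -> bool:
    """Hard constraints: same city, and a vehicle plus license if the job needs one."""
    if c.city != j.city:
        return False
    if j.needs_vehicle and not (c.has_two_wheeler and c.has_license):
        return False
    return True

def score(c: Candidate, j: Job) -> int:
    """Toy preference score: a wanted role scores higher than an acceptable one."""
    return 2 if j.role in c.preferred_roles else 1

def match(candidates: list[Candidate], jobs: list[Job]) -> dict[str, tuple[str, str]]:
    """Greedy assignment: take the best-scoring eligible pairs first, respecting openings."""
    pairs = [
        (score(c, j), ci, ji)
        for ci, c in enumerate(candidates)
        for ji, j in enumerate(jobs)
        if is_eligible(c, j)
    ]
    # Highest score first; ties broken by candidate order, then job order.
    pairs.sort(key=lambda t: (-t[0], t[1], t[2]))
    openings = [j.openings for j in jobs]
    assigned: dict[int, int] = {}
    for _, ci, ji in pairs:
        if ci in assigned or openings[ji] == 0:
            continue
        assigned[ci] = ji
        openings[ji] -= 1
    return {candidates[ci].name: (jobs[ji].employer, jobs[ji].role)
            for ci, ji in assigned.items()}

candidates = [
    Candidate("Ravi", "Bangalore", {"food delivery"}, True, True),
    Candidate("Asha", "Bangalore", {"parcel delivery"}, False, False),
    Candidate("Imran", "Bangalore", {"food delivery"}, True, True),
]
jobs = [
    Job("Employer A", "food delivery", "Bangalore", openings=1, needs_vehicle=True),
    Job("Employer B", "parcel delivery", "Bangalore", openings=2, needs_vehicle=False),
]
result = match(candidates, jobs)
print(result)
```

In this example the single food delivery opening goes to Ravi, Asha (who has no vehicle) is placed in the role she wants, and Imran falls back to parcel delivery once the food delivery slot is filled. A greedy pass is simple to follow but not guaranteed to be globally optimal; a production system would more likely learn its scores from data and solve the assignment with a dedicated optimization method.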

Y Combinator (YC) recently selected Vahan to participate in its Summer 2019 batch. In a statement, Adora Cheung, a partner at YC, said, “High mobile penetration coupled with massive growth in data consumption has made it possible for companies such as Vahan to reach millions of Indians via digital channels.”

“Vahan is addressing a space that is severely underserved and is poised for disruption via tech. Their use of WhatsApp is a great fit for reaching the blue-collar audience and their traction proves it. We are excited to back them and see them grow!”

Vahan makes money by taking a cut each time a candidate it suggests joins a corporate client's workforce. For one of the clients mentioned above, the referral cut is about 7.5%, according to two sources at that company. The amount also varies based on how long those candidates stay on the platform, they added.

In the last year, Vahan has amassed over a million users and has helped over 20,000 people secure a job. Each day, around 5,000 users check Vahan to look for a job. “This is all through zero marketing spend. Job seekers are finding us through their friends,” Krishna said.


The same audience is why Vahan operates on WhatsApp. “Most of the people we're trying to help are not active on any online recruitment platform. WhatsApp is one of the few apps they use heavily,” Krishna said. “We send over 50,000 messages a day on WhatsApp and 95% of them get read. In fact, 15% get read in under 10 seconds,” he added.

WhatsApp, with more than 400 million users in India, has become a daily habit for much of the country's internet-connected population. In recent years, many companies have built their businesses on top of the platform, using it as an effective distribution channel.

For instance, Meesho, a social commerce app, helps millions of people in the country buy and sell products on WhatsApp. It recently received an investment from Facebook, the social giant's first investment of its kind in the country. Dunzo, which is backed by Google, and ShareChat, which counts Twitter as one of its investors, also started on Facebook's instant messaging app.

As for Vahan, it plans to soon offer skilling courses to help users pursue more job opportunities in the future. It is also working with many companies on deeper system-level integrations.

As the platform gains traction, Vahan also wants to expand its offering beyond delivery jobs.
