It’s hard to know which companies you can trust these days.

Apple deserves commendation for its refusal to bow to FBI demands for “backdoor access,” but plenty of others will hand over information to the NSA without a second thought.

Here’s a look at organizations which we know have acquiesced and given the NSA access to user data. Use these services at your own peril.

1. Yahoo

Possibly the worst offender for handing over data to the NSA.

In truth, it’s already remarkable that people continue to use any of Yahoo’s services given its disastrous track record of data breaches. Hackers compromised three billion accounts in August 2013, a further 500 million in late 2014, and yet another 200 million in late 2015.

However, that’s nothing compared to the company’s NSA collusion.

In 2016, it was revealed that Yahoo had specifically created a secret email filter that would monitor its users’ inboxes and automatically send anything that was flagged to the NSA; the NSA didn’t even need to ask for the data anymore.

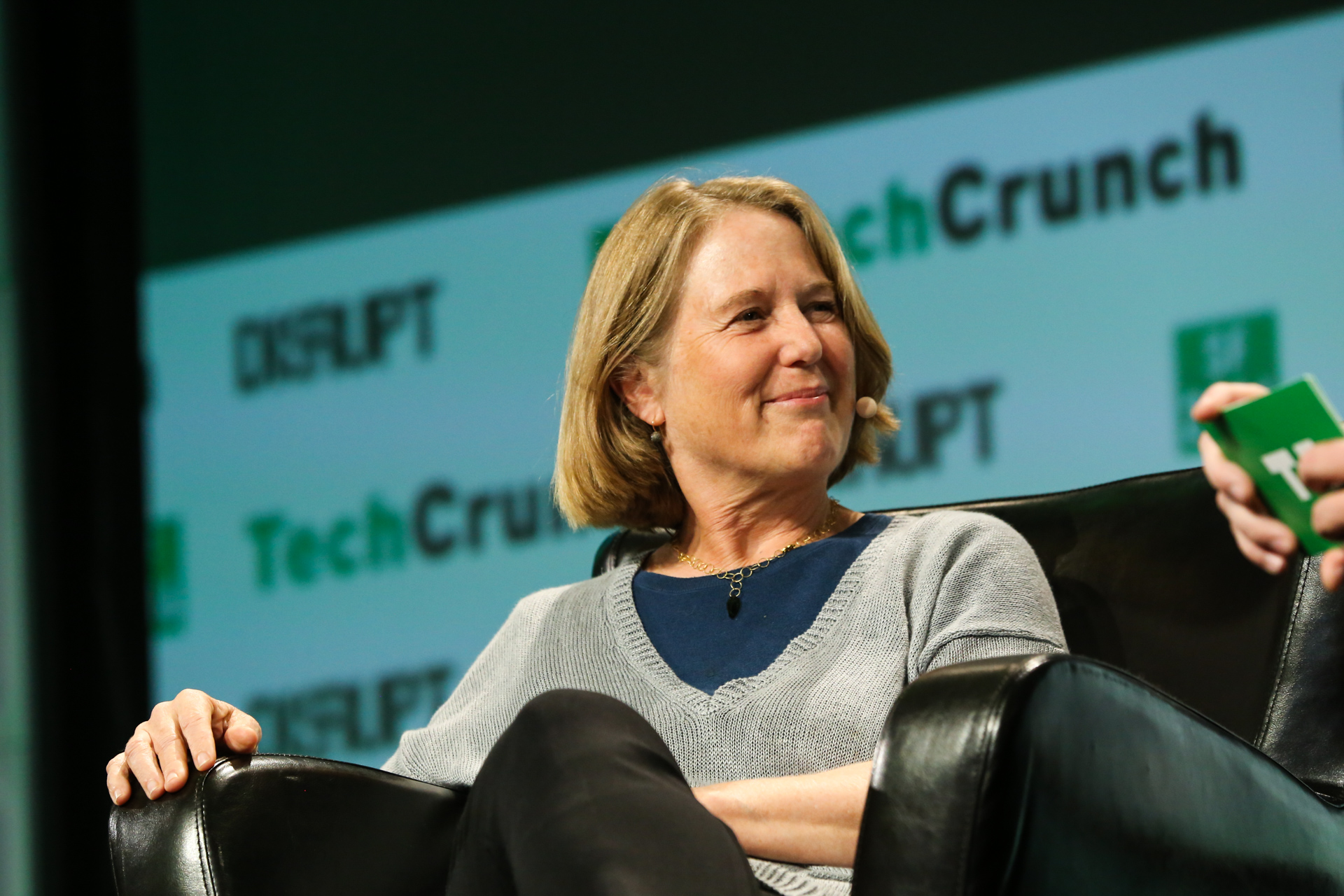

Then-CEO Marissa Mayer made the decision to create the filter. She did so without the knowledge of the company’s top security engineer, Alex Stamos. When he discovered the program, he quit on the spot.

When other employees later found the filter, they considered it so invasive that they assumed it was the work of a malicious hacker.

2. Amazon

The initial furor over PRISM and the NSA has died down a bit, but don’t let that lull you into a false sense of security.

Amazon wasn’t even one of the companies listed in the original leaked NSA slides, yet it provides the NSA with a vast amount of users’ data.

According to its most recent transparency report, which became available at the end of December 2017, Amazon received:

- 1,618 subpoenas. The company fully complied with 42 percent and partially complied with 31 percent.

- 229 search warrants. The company fully complied with 44 percent and partially complied with 37 percent.

- 89 other court orders. The company fully complied with 52 percent and partially complied with 32 percent.

All the requests except one came from within the United States, and the figures mark an increase of nearly 15 percent compared to the previous six months.

Additionally, Amazon is not allowed to say how many national security letters it has received. The company can, however, say if it did not receive any. Amazon chose to declare that it had received between zero and 249.

3. Verizon

In June 2013, the UK’s Guardian newspaper obtained a leaked document which showed the NSA collected phone records from millions of Verizon customers every day.

Thanks to a top-secret court order from April of the same year, Verizon was required to give the NSA details on every single phone call in its systems, including both domestic and international calls.

The court order was valid for three months and expired in July 2013.

The order said Verizon had to hand over the numbers of both parties, the location data, any unique identifiers, and the time and duration of all calls. All that metadata can reveal a lot about the people behind the calls.

Worse still, the ruling forbade Verizon from telling the public about the court order or the NSA’s request.

The program was codenamed Ragtime. Further documents leaked in late 2017 showed the Ragtime project was not only alive and well, but was also much broader in scope than first imagined.

Ragtime-P, which was the part of the program that the Verizon court order fell under, was still active. But the leak revealed there are 10 further variants. From a US citizen’s standpoint, the most troubling is Ragtime-USP (US person).

In theory, American citizens and permanent residents are protected from the collection of phone records after a 2015 ruling; however, the presence of Ragtime-USP calls that into question.

Unfortunately, we don’t know which companies are colluding with Ragtime-USP.

4. Facebook

Facebook has always been at the forefront of the NSA surveillance debate. Like Amazon, it publishes the details on the number of information requests it receives. And today, the NSA is requesting more information than ever before.

The most recent figures available are for the first six months of 2017. The data shows the NSA’s number of requests rose by 26 percent in that period compared to the previous six-month period.

It’s part of the same long-term upwards trend that’s seen the number of requests go from approximately 10,000 in the first six months of 2013 to more than 33,000 in the first six months of 2017.

And while Facebook is receiving more requests, it is also agreeing to a larger share of them. In the first six months of 2013, it agreed to 79 percent of NSA requests. In the first six months of 2017, that had risen to 85 percent.

It goes on. A considerable 57 percent of the NSA requests that Facebook received included a non-disclosure clause. That means Facebook cannot tell the user that the NSA requested their data. If you think the clause has the potential to be abused, you’d be right; the number of non-disclosure orders in the first six months of 2017 rose by a staggering 50 percent compared to the last six months of 2016.

5. AT&T

Yahoo’s customized email filter might be the most brazen spying apparatus on this list, but AT&T arguably wins the battle for being the most complicit company.

In 2015, a batch of leaked NSA documents laid bare the relationship between the telecoms giant and the government agency.

One document revealed the association between the two was “unique and especially productive.” Another said AT&T was “highly collaborative” and praised the company for its “extreme willingness to help.”

A third document showed NSA employees were repeatedly reminded to be polite when visiting AT&T premises, saying “This is a partnership, not a contractual relationship.”

The collaboration program is called Fairview. It began way back in 1985 after the breakup of Ma Bell but ramped up to its current levels in the aftermath of 9/11. AT&T began giving the NSA access to its information within days of the attack; within the first month of operation, it gave the NSA more than 400 billion internet metadata records.

In 2011, the Fairview program went up another notch. The documents show that AT&T started providing the NSA with 1.1 billion domestic cell phone calling records every day.

And if you think you’re in the clear because you’re not an AT&T customer, think again. One of the leaked documents says the relationship with AT&T “provides unique accesses to other telecoms and ISPs.”

The cross-ISP surveillance is possible because of the way email data collection occurs. To tap a single email, parts of several other emails also need to be collected. For American-to-American communications, the law means the NSA will (theoretically) discard those emails immediately.

However, for foreigner-to-American and foreigner-to-foreigner emails, the law does not apply. As such, the NSA can engage in bulk collection without a warrant. Given that so much of the web’s data flows through American cables, this loophole is especially lucrative to the agency.

Protecting Yourself Against Internet Surveillance

We’ve only discussed five of the most worrisome and high-profile cases of the NSA forcefully grabbing data from companies.

However, there are undoubtedly near-endless cases of smaller companies also handing over data—either willingly or by legal force. Sadly, those cases are not in the public domain and probably never will be.

Despite all the collection, there are still some steps you can take to protect yourself from excessive internet surveillance.

Read the full article: 5 Times Your Data Was Shockingly Handed Over to the NSA


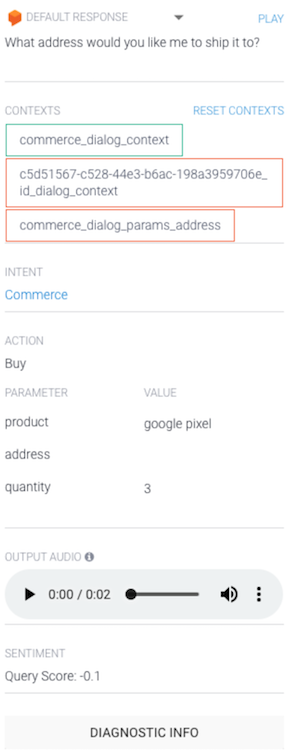
