European Union lawmakers want online platforms to come up with their own systems to identify bot accounts.

This is as part of a voluntary Code of Practice the European Commission now wants platforms to develop and apply — by this summer — as part of a wider package of proposals it’s put out which are generally aimed at tackling the problematic spread and impact of disinformation online.

The proposals follow an EC-commissioned report last month, by its High-Level Expert Group, which recommended more transparency from online platforms to help combat the spread of false information online — and also called for urgent investment in media and information literacy education, and strategies to empower journalists and foster a diverse and sustainable news media ecosystem.

Bots, fake accounts, political ads, filter bubbles

In an announcement on Friday the Commission said it wants platforms to establish “clear marking systems and rules for bots” in order to ensure “their activities cannot be confused with human interactions”. It does not go into a greater level of detail on how that might be achieved. Clearly it’s intending platforms to have to come up with relevant methodologies.

Identifying bots is not an exact science — as academics conducting research into how information spreads online could tell you. The current tools that exist for trying to spot bots typically involve rating accounts across a range of criteria to give a score of how likely an account is to be algorithmically controlled vs human controlled. But platforms do at least have a perfect view into their own systems, whereas academics have had to rely on the variable level of access platforms are willing to give them.

Another factor here is that given the sophisticated nature of some online disinformation campaigns — the state-sponsored and heavily resourced efforts by Kremlin backed entities such as Russia’s Internet Research Agency, for example — if the focus ends up being algorithmically controlled bots vs IDing bots that might have human agents helping or controlling them, plenty of more insidious disinformation agents could easily slip through the cracks.

That said, other measures in the EC’s proposals for platforms include stepping up their existing efforts to shutter fake accounts and being able to demonstrate the “effectiveness” of such efforts — so greater transparency around how fake accounts are identified and the proportion being removed (which could help surface more sophisticated human-controlled bot activity on platforms too).

Another measure from the package: The EC says it wants to see “significantly” improved scrutiny of ad placements — with a focus on trying to reduce revenue opportunities for disinformation purveyors.

Restricting targeting options for political advertising is another component. “Ensure transparency about sponsored content relating to electoral and policy-making processes,” is one of the listed objectives on its fact sheet — and ad transparency is something Facebook has said it’s prioritizing since revelations about the extent of Kremlin disinformation on its platform during the 2016 US presidential election, with expanded tools due this summer.

The Commission also says generally that it wants platforms to provide “greater clarity about the functioning of algorithms” and enable third-party verification — though there’s no greater level of detail being provided at this point to indicate how much algorithmic accountability it’s after from platforms.

We’ve asked for more on its thinking here and will update this story with any response. It looks to be seeking to test the water to see how much of the workings of platforms’ algorithmic blackboxes can be coaxed from them voluntarily — such as via measures targeting bots and fake accounts — in an attempt to stave off formal and more fulsome regulations down the line.

Filter bubbles also appear to be informing the Commission’s thinking, as it says it wants platforms to make it easier for users to “discover and access different news sources representing alternative viewpoints” — via tools that let users customize and interact with the online experience to “facilitate content discovery and access to different news sources”.

Though another stated objective is for platforms to “improve access to trustworthy information” — so there are questions about how those two aims can be balanced, i.e. without efforts towards one undermining the other.

On trustworthiness, the EC says it wants platforms to help users assess whether content is reliable using “indicators of the trustworthiness of content sources”, as well as by providing “easily accessible tools to report disinformation”.

In one of several steps Facebook has taken since 2016 to try to tackle the problem of fake content being spread on its platform the company experimented with putting ‘disputed’ labels or red flags on potentially untrustworthy information. However the company discontinued this in December after research suggested negative labels could entrench deeply held beliefs, rather than helping to debunk fake stories.

Instead it started showing related stories — containing content it had verified as coming from news outlets its network of fact checkers considered reputable — as an alternative way to debunk potential fakes.

The Commission’s approach looks to be aligning with Facebook’s rethought approach — with the subjective question of how to make judgements on what is (and therefore what isn’t) a trustworthy source likely being handed off to third parties, given that another strand of the code is focused on “enabling fact-checkers, researchers and public authorities to continuously monitor online disinformation”.

Since 2016 Facebook has been leaning heavily on a network of local third party ‘partner’ fact-checkers to help identify and mitigate the spread of fakes in different markets — including checkers for written content and also photos and videos, the latter in an effort to combat fake memes before they have a chance to go viral and skew perceptions.

In parallel Google has also been working with external fact checkers, such as on initiatives such as highlighting fact-checked articles in Google News and search.

The Commission clearly approves of the companies reaching out to a wider network of third party experts. But it is also encouraging work on innovative tech-powered fixes to the complex problem of disinformation — describing AI (“subject to appropriate human oversight”) as set to play a “crucial” role for “verifying, identifying and tagging disinformation”, and pointing to blockchain as having promise for content validation.

Specifically it reckons blockchain technology could play a role by, for instance, being combined with the use of “trustworthy electronic identification, authentication and verified pseudonyms” to preserve the integrity of content and validate “information and/or its sources, enable transparency and traceability, and promote trust in news displayed on the Internet”.

It’s one of a handful of nascent technologies the executive flags as potentially useful for fighting fake news, and whose development it says it intends to support via an existing EU research funding vehicle: The Horizon 2020 Work Program.

It says it will use this program to support research activities on “tools and technologies such as artificial intelligence and blockchain that can contribute to a better online space, increasing cybersecurity and trust in online services”.

It also flags “cognitive algorithms that handle contextually-relevant information, including the accuracy and the quality of data sources” as a promising tech to “improve the relevance and reliability of search results”.

The Commission is giving platforms until July to develop and apply the Code of Practice — and is using the possibility that it could still draw up new laws if it feels the voluntary measures fail as a mechanism to encourage companies to put the sweat in.

It is also proposing a range of other measures to tackle the online disinformation issue — including:

- An independent European network of fact-checkers: The Commission says this will establish “common working methods, exchange best practices, and work to achieve the broadest possible coverage of factual corrections across the EU”; and says they will be selected from the EU members of the International Fact Checking Network which it notes follows “a strict International Fact Checking NetworkCode of Principles”

- A secure European online platform on disinformation to support the network of fact-checkers and relevant academic researchers with “cross-border data collection and analysis”, as well as benefitting from access to EU-wide data

- Enhancing media literacy: On this it says a higher level of media literacy will “help Europeans to identify online disinformation and approach online content with a critical eye”. So it says it will encourage fact-checkers and civil society organisations to provide educational material to schools and educators, and organise a European Week of Media Literacy

- Support for Member States in ensuring the resilience of elections against what it dubs “increasingly complex cyber threats” including online disinformation and cyber attacks. Stated measures here include encouraging national authorities to identify best practices for the identification, mitigation and management of risks in time for the 2019 European Parliament elections. It also notes work by a Cooperation Group, saying “Member States have started to map existing European initiatives on cybersecurity of network and information systems used for electoral processes, with the aim of developing voluntary guidance” by the end of the year. It also says it will also organise a high-level conference with Member States on cyber-enabled threats to elections in late 2018

- Promotion of voluntary online identification systems with the stated aim of improving the “traceability and identification of suppliers of information” and promoting “more trust and reliability in online interactions and in information and its sources”. This includes support for related research activities in technologies such as blockchain, as noted above. The Commission also says it will “explore the feasibility of setting up voluntary systems to allow greater accountability based on electronic identification and authentication scheme” — as a measure to tackle fake accounts. “Together with others actions aimed at improving traceability online (improving the functioning, availability and accuracy of information on IP and domain names in the WHOIS system and promoting the uptake of the IPv6 protocol), this would also contribute to limiting cyberattacks,” it adds

- Support for quality and diversified information: The Commission is calling on Member States to scale up their support of quality journalism to ensure a pluralistic, diverse and sustainable media environment. The Commission says it will launch a call for proposals in 2018 for “the production and dissemination of quality news content on EU affairs through data-driven news media”

It says it will aim to co-ordinate its strategic comms policy to try to counter “false narratives about Europe” — which makes you wonder whether debunking the output of certain UK tabloid newspapers might fall under that new EC strategy — and also more broadly to tackle disinformation “within and outside the EU”.

Commenting on the proposals in a statement, the Commission’s VP for the Digital Single Market, Andrus Ansip, said: “Disinformation is not new as an instrument of political influence. New technologies, especially digital, have expanded its reach via the online environment to undermine our democracy and society. Since online trust is easy to break but difficult to rebuild, industry needs to work together with us on this issue. Online platforms have an important role to play in fighting disinformation campaigns organised by individuals and countries who aim to threaten our democracy.”

The EC’s next steps now will be bringing the relevant parties together — including platforms, the ad industry and “major advertisers” — in a forum to work on greasing cooperation and getting them to apply themselves to what are still, at this stage, voluntary measures.

“The forum’s first output should be an EU–wide Code of Practice on Disinformation to be published by July 2018, with a view to having a measurable impact by October 2018,” says the Commission.

The first progress report will be published in December 2018. “The report will also examine the need for further action to ensure the continuous monitoring and evaluation of the outlined actions,” it warns.

And if self-regulation fails…

In a fact sheet further fleshing out its plans, the Commission states: “Should the self-regulatory approach fail, the Commission may propose further actions, including regulatory ones targeted at a few platforms.”

And for “a few” read: Mainstream social platforms — so likely the big tech players in the social digital arena: Facebook, Google, Twitter.

For potential regulatory actions tech giants only need look to Germany, where a 2017 social media hate speech law has introduced fines of up to €50M for platforms that fail to comply with valid takedown requests within 24 hours for simple cases, for an example of the kind of scary EU-wide law that could come rushing down the pipe at them if the Commission and EU states decide its necessary to legislate.

Though justice and consumer affairs commissioner, Vera Jourova, signaled in January that her preference on hate speech at least was to continue pursuing the voluntary approach — though she also said some Member State’s ministers are open to a new EU-level law should the voluntary approach fail.

In Germany the so-called NetzDG law has faced criticism for pushing platforms towards risk aversion-based censorship of online content. And the Commission is clearly keen to avoid such charges being leveled at its proposals, stressing that if regulation were to be deemed necessary “such [regulatory] actions should in any case strictly respect freedom of expression”.

Commenting on the Code of Practice proposals, a Facebook spokesperson told us: “People want accurate information on Facebook – and that’s what we want too. We have invested in heavily in fighting false news on Facebook by disrupting the economic incentives for the spread of false news, building new products and working with third-party fact checkers.”

A Twitter spokesman declined to comment on the Commission’s proposals but flagged contributions he said the company is already making to support media literacy — including an event last week at its EMEA HQ.

At the time of writing Google had not responded to a request for comment.

Last month the Commission did further tighten the screw on platforms over terrorist content specifically — saying it wants them to get this taken down within an hour of a report as a general rule. Though it still hasn’t taken the step to cement that hour ‘rule’ into legislation, also preferring to see how much action it can voluntarily squeeze out of platforms via a self-regulation route.

Read Full Article

Read Full Article

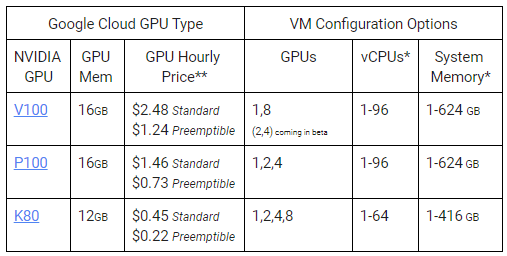

All of that power comes at a price, of course. An hour of V100 usage costs $2.48, while the P100 will set you back $1.46 per hour (these are the standard prices, with the preemptible machines coming in at half that price). In addition, you’ll also need to pay Google to run your regular virtual machine or containers.

All of that power comes at a price, of course. An hour of V100 usage costs $2.48, while the P100 will set you back $1.46 per hour (these are the standard prices, with the preemptible machines coming in at half that price). In addition, you’ll also need to pay Google to run your regular virtual machine or containers.