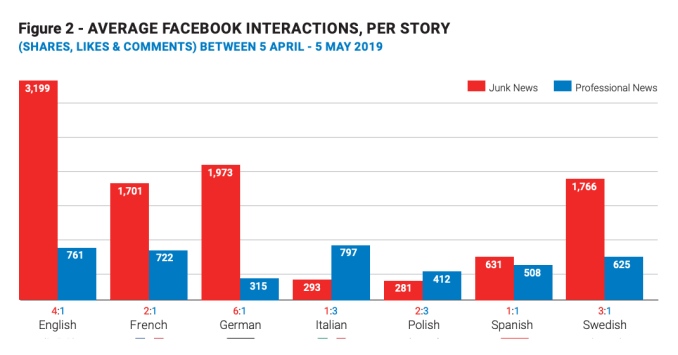

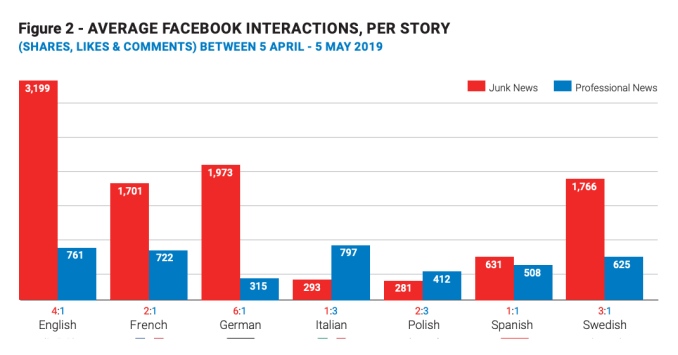

A study carried out by academics at Oxford University to investigate how junk news is being shared on social media in Europe ahead of regional elections this month has found individual stories shared on Facebook’s platform can still hugely outperform the most important and professionally produced news stories, drawing as much as 4x the volume of Facebook shares, likes, and comments.

The study, conducted for the Oxford Internet Institute’s (OII) Computational Propaganda Project, is intended to respond to widespread concern about the spread of online political disinformation around the EU elections, which take place later this month, by examining pre-election chatter on Facebook and Twitter in English, French, German, Italian, Polish, Spanish, and Swedish.

Junk news in this context refers to content produced by known sources of political misinformation — aka outlets that are systematically producing and spreading “ideologically extreme, misleading, and factually incorrect information” — with the researchers comparing interactions with junk stories from such outlets to news stories produced by the most popular professional news sources to get a snapshot of public engagement with sources of misinformation ahead of the EU vote.

As we reported last year, the Institute also launched a junk news aggregator ahead of the US midterms to help Internet users get a handle on manipulative politically-charged content that might be hitting their feeds.

In the EU, the European Commission has responded to rising concern about the impact of online disinformation on democratic processes by stepping up pressure on platforms and the adtech industry, issuing monthly progress reports since January after the introduction of a voluntary code of practice last year intended to encourage action to squeeze the spread of manipulative fakes. So far, though, these ‘progress’ reports have mostly boiled down to calls for less foot-dragging and more action.

One tangible result last month was Twitter introducing a report option for misleading tweets related to voting ahead of the EU vote, though again you have to wonder what took it so long given that online election interference is hardly a new revelation. (The OII study is also just the latest piece of research to bolster the age-old maxim that falsehoods fly and the truth comes limping after.)

The study also examined how junk news spread on Twitter during the pre-EU election period, with the researchers finding that less than 4% of sources circulating on Twitter’s platform were junk news (or “known Russian sources”) — with Twitter users sharing far more links to mainstream news outlets overall (34%) over the study period.

The Polish language sphere was an exception, however, with junk news making up a fifth (21%) of EU election-related Twitter traffic in that outlying case.

Returning to Facebook, the researchers do note that many more users interact with mainstream content overall via its platform: mainstream publishers have a higher following and so “wider access to drive activity around their content”, meaning their stories “tend to be seen, liked, and shared by far more users overall”. But they also point out that junk news still packs a greater per-story punch, likely owing to tactics such as clickbait, emotive language, and outragemongering in headlines, which continue to be shown to generate more clicks and engagement on social media.

It’s also of course much quicker and easier to make some shit up vs the slower pace of doing rigorous professional journalism, so junk news purveyors can also get out ahead of news events as an eyeball-grabbing strategy to further the spread of their cynical BS. (And indeed the researchers go on to say that most of the junk news sources being shared during the pre-election period “either sensationalized or spun political and social events covered by mainstream media sources to serve a political and ideological agenda”.)

“While junk news sites were less prolific publishers than professional news producers, their stories tend to be much more engaging,” they write in a data memo covering the study. “Indeed, in five out of the seven languages (English, French, German, Spanish, and Swedish), individual stories from popular junk news outlets received on average between 1.2 to 4 times as many likes, comments, and shares than stories from professional media sources.

“In the German sphere, for instance, interactions with mainstream stories averaged only 315 (the lowest across this sub-sample) while nearing 1,973 for equivalent junk news stories.”

To conduct the research the academics gathered more than 584,000 tweets related to the European parliamentary elections from more than 187,000 unique users between April 5 and April 20 using election-related hashtags — from which they extracted more than 137,000 tweets containing a URL link, which pointed to a total of 5,774 unique media sources.

Sources that were shared 5x or more across the collection period were manually classified by a team of nine multi-lingual coders based on what they describe as “a rigorous grounded typology developed and refined through the project’s previous studies of eight elections in several countries around the world”.
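The filtering step described above (pull the links out of the collected tweets, group them by media source, and keep only sources shared five or more times for manual coding) can be illustrated with a minimal sketch. This is not the researchers' actual tooling, which the memo excerpts here do not describe; the function names, the URL pattern, and the choice to treat a link's hostname as its "source" are all assumptions made for illustration.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Assumption: a simple pattern is good enough to find links in tweet text.
URL_PATTERN = re.compile(r"https?://\S+")


def source_of(url):
    """Reduce a URL to a source name (hypothetical rule: hostname minus 'www.')."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def sources_to_classify(tweets, min_shares=5):
    """Count shares per source and keep those at or above the threshold."""
    counts = Counter()
    for text in tweets:
        for url in URL_PATTERN.findall(text):
            counts[source_of(url)] += 1
    return {source: n for source, n in counts.items() if n >= min_shares}
```

Under these assumptions, a source linked in five separate tweets would be passed on to the human coders, while one linked only twice would be left out of the classification step.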

Each media source was coded individually by two separate coders, a technique they say successfully labeled nearly 91% of all links shared during the study period.

The five most popular junk news sources were extracted from each language sphere looked at — with the researchers then measuring the volume of Facebook interactions with these outlets between April 5 and May 5, using the NewsWhip Analytics dashboard.

They also conducted a thematic analysis of the 20 most engaging junk news stories on Facebook during the data collection period to gain a better understanding of the different political narratives favoured by junk news outlets ahead of an election.

On the latter front they say the most engaging junk narratives over the study period “tend to revolve around populist themes such as anti-immigration and Islamophobic sentiment, with few expressing Euroscepticism or directly mentioning European leaders or parties”.

Which suggests that EU-level political disinformation is a more issue-focused animal (and/or less developed) — vs the kind of personal attacks that have been normalized in US politics (and were richly and infamously exploited by Kremlin-backed anti-Clinton political disinformation during the 2016 US presidential election, for example).

This is likely also because of a lower level of political awareness attached to individuals involved in EU institutions and politics, and the multi-national state nature of the pan-EU project — which inevitably bakes in far greater diversity. (We can posit that just as it aids robustness in biological life, diversity appears to bolster democratic resilience vs political nonsense.)

The researchers also say they identified two noticeable patterns in the thematic content of junk stories that sought to cynically spin political or social news events for political gain over the pre-election study period.

“Out of the twenty stories we analysed, 9 featured explicit mentions of ‘Muslims’ and the Islamic faith in general, while seven mentioned ‘migrants’, ‘immigration’, or ‘refugees’… In seven instances, mentions of Muslims and immigrants were coupled with reporting on terrorism or violent crime, including sexual assault and honour killings,” they write.

“Several stories also mentioned the Notre Dame fire, some propagating the idea that the arson had been deliberately plotted by Islamist terrorists, for example, or suggesting that the French government’s reconstruction plans for the cathedral would include a minaret. In contrast, only 4 stories featured Euroscepticism or direct mention of European Union leaders and parties.

“The ones that did either turned a specific political figure into one of derision – such as Arnoud van Doorn, former member of PVV, the Dutch nationalist and far-right party of Geert Wilders, who converted to Islam in 2012 – or revolved around domestic politics. One such story relayed allegations that Emmanuel Macron had been using public taxes to finance ISIS jihadists in Syrian camps, while another highlighted an offer by Vladimir Putin to provide financial assistance to rebuild Notre Dame.”

Taken together, the researchers conclude that “individuals discussing politics on social media ahead of the European parliamentary elections shared links to high-quality news content, including high volumes of content produced by independent citizen, civic groups and civil society organizations, compared to other elections we monitored in France, Sweden, and Germany”.

Which suggests that attempts to manipulate the pan-EU election are either less prolific or, well, less successful than those which have targeted some recent national elections in EU Member States. And logic would suggest that co-ordinating election interference across a 28-Member State bloc does require greater co-ordination and resource vs trying to meddle in a single national election — on account of the multiple countries, cultures, languages and issues involved.

We’ve reached out to Facebook for comment on the study’s findings.

The company has put a heavy focus on publicizing its self-styled ‘election security’ efforts ahead of the EU election, though it has mostly focused on setting up systems to control political ads. Junk news purveyors, meanwhile, are simply uploading regular Facebook ‘content’ while wrapping it in bogus claims of ‘journalism’, none of which Facebook objects to. All of which allows would-be election manipulators to pass off junk views as online news, leveraging the reach of Facebook’s platform and its attention-hogging algorithms to amplify hateful nonsense. While any increase in engagement is a win for Facebook’s ad business, so er…


With this broader concept in mind, Daisie began fundraising in San Francisco shortly after the beta launch. The round initially closed in October 2018, but was more recently reopened to allow Dror’s investment.


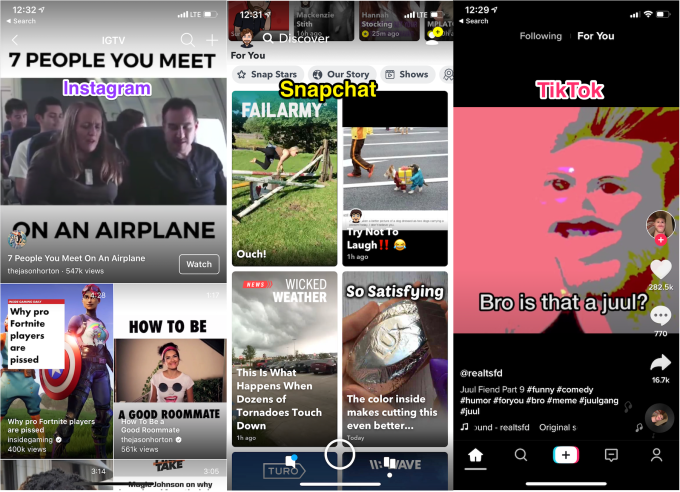

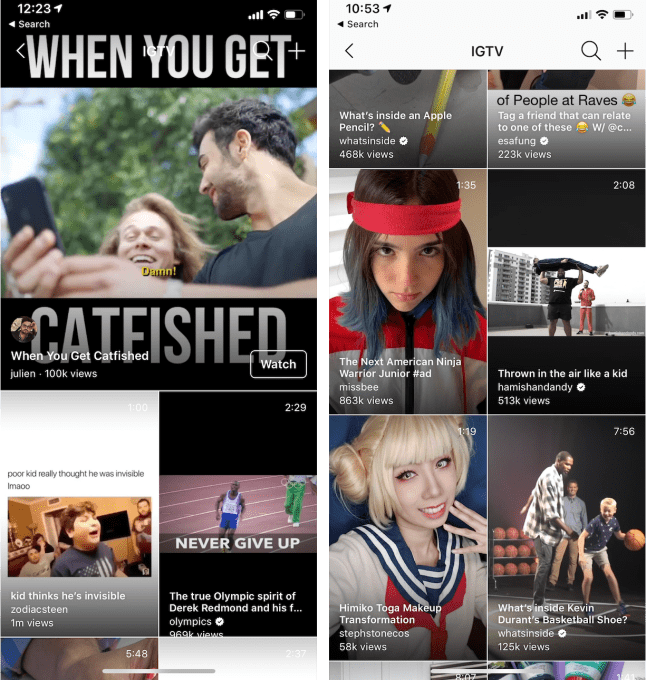

IGTV has ditched its category-based navigation system’s tabs like “For You”, “Following”, “Popular”, and “Continue Watching” for just one central feed of algorithmically suggested videos — much like TikTok. This affords a more lean-back, ‘just show me something fun’ experience that relies on Instagram’s AI to analyze your behavior and recommend content instead of putting the burden of choice on the viewer.
