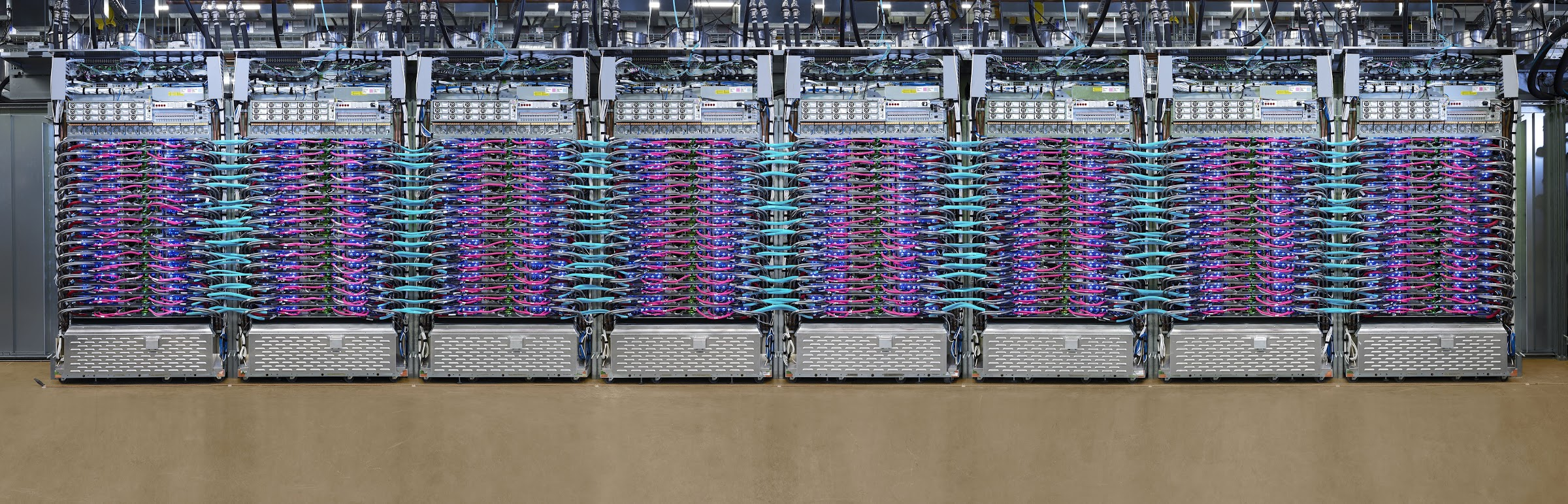

Google today announced that its second- and third-generation Cloud TPU Pods — its scalable cloud-based supercomputers with up to 1,000 of its custom Tensor Processing Units — are now publicly available in beta.

The latest-generation v3 models are especially powerful and are liquid-cooled. Each pod can deliver up to 100 petaFLOPS. As Google notes, that raw computing power puts it within the top 5 supercomputers worldwide, but you need to take that number with a grain of salt given that the TPU pods operate at a far lower numerical precision.

You don’t need to use a full TPU Pod, though. Google also lets developers rent ‘slices’ of these machines. Either way, we’re talking about a very powerful machine that can train a standard ResNet-50 image classification model on the ImageNet dataset in two minutes.

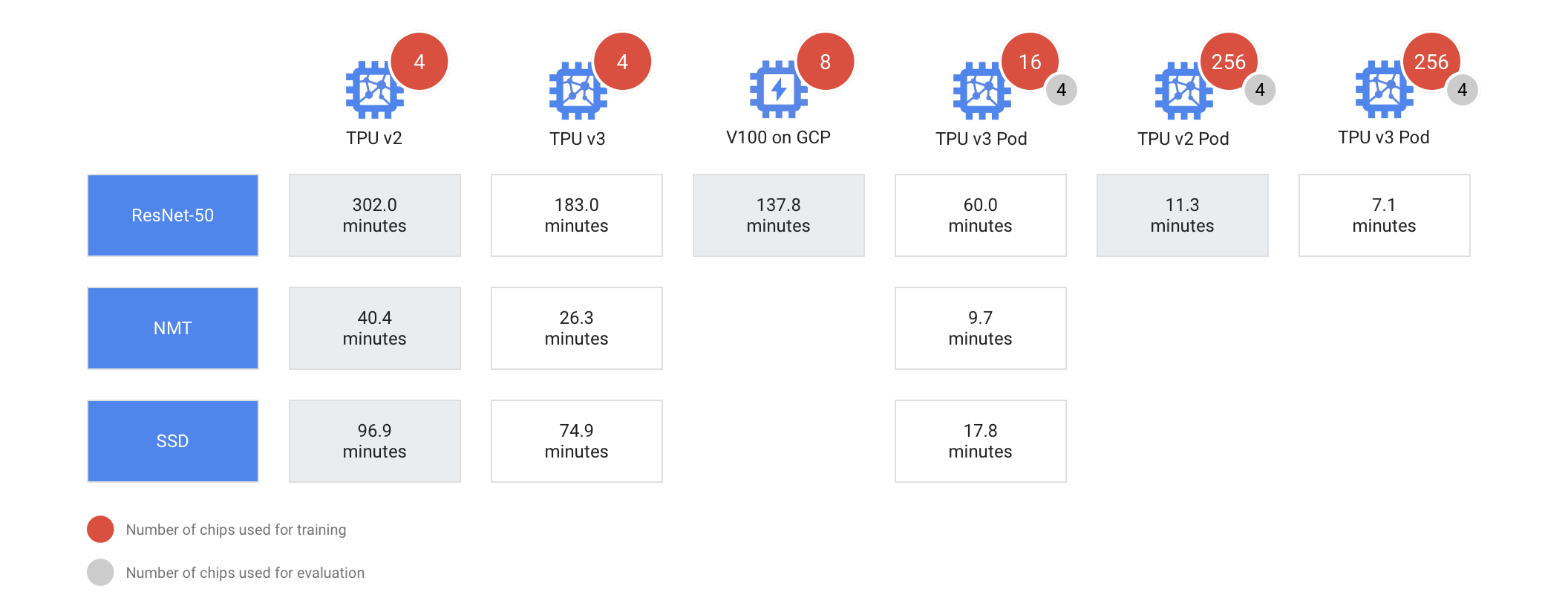

The TPU v2 Pods feature up to 512 cores and are a bit slower than the v3 ones, of course. When using 256 TPUs, for example, a v2 Pod will train the ResNet-50 model in 11.3 minutes while a v3 Pod will take only 7.1 minutes. On a single TPU, the same training run takes 302 minutes.
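
To put those numbers in perspective, here is a quick back-of-the-envelope sketch of the speedups they imply. It uses only the three training times quoted above; the variable and function names are made up for illustration, and since the article doesn't say which TPU generation the single-TPU figure refers to, treat the ratios as rough.

```python
# ResNet-50 training times on ImageNet, in minutes, as quoted in the article.
# Which TPU generation the single-TPU figure refers to is not stated there.
SINGLE_TPU_MIN = 302.0
V2_POD_SLICE_MIN = 11.3
V3_POD_SLICE_MIN = 7.1


def speedup(baseline_min: float, faster_min: float) -> float:
    """Return how many times faster `faster_min` is than `baseline_min`."""
    if baseline_min <= 0 or faster_min <= 0:
        raise ValueError("training times must be positive")
    return baseline_min / faster_min


v2_vs_single = speedup(SINGLE_TPU_MIN, V2_POD_SLICE_MIN)
v3_vs_single = speedup(SINGLE_TPU_MIN, V3_POD_SLICE_MIN)
v3_vs_v2 = speedup(V2_POD_SLICE_MIN, V3_POD_SLICE_MIN)

print(f"v2 Pod slice vs. single TPU: {v2_vs_single:.1f}x")  # 26.7x
print(f"v3 Pod slice vs. single TPU: {v3_vs_single:.1f}x")  # 42.5x
print(f"v3 Pod slice vs. v2 Pod slice: {v3_vs_v2:.1f}x")    # 1.6x
```

In other words, the quoted v2 slice is roughly 27 times faster than a single TPU on this benchmark, and the v3 slice is about 1.6 times faster again.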

Unsurprisingly, Google argues that Pods are best used when you need to quickly train a model (and the price isn’t that much of an issue), need higher accuracy using larger datasets with millions of labeled examples, or when you want to rapidly prototype new models.
