Consumer expectations are higher than ever as a new generation of shoppers looks to shop for experiences rather than commodities. They expect instant and highly tailored (pun intended?) customer service and recommendations across every retail channel.

To be forward-looking, brands and retailers are turning to startups in image recognition and machine learning to know, at a very deep level, what each consumer’s current context and personal preferences are and how they evolve. But while brands and retailers are sitting on enormous amounts of data, only a handful are actually leveraging it to its full potential.

To provide hyper-personalization in real time, a brand needs a deep understanding of its products and customer data. Imagine a shopper browsing the website for an edgy dress: the brand recognizes the shopper’s context and preferences in other features such as style, fit, occasion and color, then uses this information implicitly while fetching similar dresses for the user.

Another situation is where the shopper searches for clothes inspired by their favorite fashion bloggers or Instagram influencers using images in place of text search. This would shorten product discovery time and help the brand build a hyper-personalized experience which the customer then rewards with loyalty.
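To make the image-search idea concrete, here is a minimal sketch of how "find products that look like this photo" could work once every image has been turned into a feature vector. The vectors, the product names and the `most_similar` helper are all made up for illustration; in a real system the vectors would come from a trained neural network, not be typed in by hand.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)

def most_similar(query, catalog, top_k=2):
    """Return the ids of the top_k catalog items closest to the query vector."""
    scored = [(cosine_similarity(query, vec), pid) for pid, vec in catalog.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [pid for _, pid in scored[:top_k]]

# Hypothetical 3-dimensional image features (assumption: real ones are much larger).
CATALOG = {
    "red-maxi-dress": [0.9, 0.1, 0.3],
    "black-leather-jacket": [0.1, 0.9, 0.2],
    "red-wrap-dress": [0.8, 0.2, 0.4],
}

# Hypothetical features for a photo taken from an influencer's feed.
influencer_photo = [0.85, 0.15, 0.35]

print(most_similar(influencer_photo, CATALOG))
```

With these toy numbers the two dresses rank ahead of the jacket, which is the behavior a shopper would expect from an image-based search.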

With the sheer number of products being sold online, shoppers primarily discover products through category or search-based navigation. However, inconsistencies in the product metadata created by vendors or merchandisers lead to poor recall of products and broken search experiences. This is where image recognition and machine learning come in: they can analyze enormous data sets and the vast assortment of visual features present in a product, automatically extract labels from the product images and so improve the accuracy of search results.
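The recall problem is easy to see with a toy catalog. The sketch below assumes each product carries two hypothetical fields: `vendor_tags` (hand-entered, inconsistent) and `image_labels` (standing in for labels a vision model would extract from the product photo). The products, field names and labels are invented for illustration and hard-coded here; no model is actually run.

```python
def matches(product, term, fields):
    """True if the search term equals any label in the given fields."""
    labels = {label.lower() for f in fields for label in product.get(f, [])}
    return term.lower() in labels

def search(products, term, fields):
    """Return the ids of all products matching the term."""
    return {p["id"] for p in products if matches(p, term, fields)}

def recall(found, relevant):
    """Fraction of the relevant products that the search actually returned."""
    return len(found & relevant) / len(relevant) if relevant else 0.0

# Hypothetical catalog: three dresses, but only one vendor used the word "dress".
PRODUCTS = [
    {"id": "d1", "vendor_tags": ["dress", "red"], "image_labels": ["dress", "red", "maxi"]},
    {"id": "d2", "vendor_tags": ["frock", "crimson"], "image_labels": ["dress", "red", "wrap"]},
    {"id": "d3", "vendor_tags": [], "image_labels": ["dress", "black"]},
    {"id": "j1", "vendor_tags": ["jacket"], "image_labels": ["jacket", "black"]},
]
RELEVANT = {"d1", "d2", "d3"}  # what a shopper searching "dress" should see

vendor_only = search(PRODUCTS, "dress", ["vendor_tags"])
with_images = search(PRODUCTS, "dress", ["vendor_tags", "image_labels"])

print("vendor tags only:", recall(vendor_only, RELEVANT))
print("with image labels:", recall(with_images, RELEVANT))
```

Searching vendor tags alone finds one of the three dresses; adding the image-derived labels finds all three, without anyone having to clean up the vendor data first.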

Why is image recognition better than ever before?

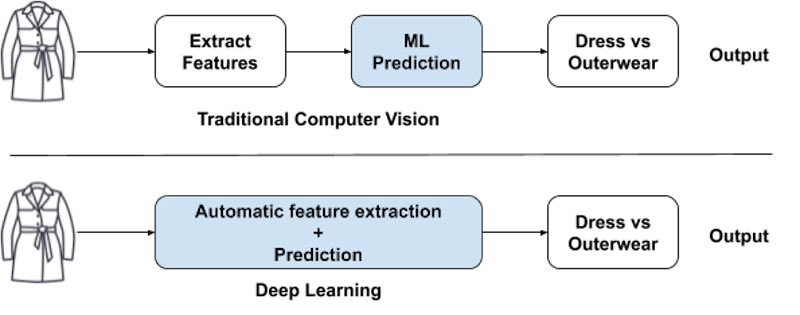

While computer vision has been around for decades, it has recently become far more powerful thanks to the rise of deep neural networks. Traditional vision techniques laid the foundation for detecting edges, corners, colors and objects in input images, but they required humans to engineer the features to look for. Traditional algorithms also found it difficult to cope with changes in illumination, viewpoint, scale, image quality, etc.
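As an illustration of what "human-engineered features" means, here is a small sketch of one classic technique, the Sobel edge filter, written in plain Python. The two kernels are the standard Sobel kernels; the tiny test images, the `has_edge` helper and the threshold of 500 are assumptions chosen for this example. It also shows the illumination problem: a threshold tuned by hand for a bright image misses the same edge in a dim one.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def apply_kernel(image, kernel, row, col):
    """Weighted sum of the 3x3 neighborhood centered on (row, col)."""
    return sum(
        kernel[i][j] * image[row - 1 + i][col - 1 + j]
        for i in range(3)
        for j in range(3)
    )

def edge_magnitude(image):
    """Gradient magnitude per pixel; border pixels are left at 0."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)
            gy = apply_kernel(image, SOBEL_Y, r, c)
            out[r][c] = math.hypot(gx, gy)
    return out

def has_edge(image, threshold=500):
    """Hand-tuned rule (assumption): an edge exists if any magnitude reaches the threshold."""
    return any(value >= threshold for row in edge_magnitude(image) for value in row)

# Toy grayscale images: dark left half, lighter right half.
BRIGHT = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
DIM = [[0, 0, 0, 60, 60, 60] for _ in range(5)]  # same scene, poor lighting

print(has_edge(BRIGHT))  # True: the vertical edge is found
print(has_edge(DIM))     # False: same edge, but below the hand-picked threshold
```

Every choice here (the kernels, the threshold) was made by a person, and the rule breaks as soon as the lighting changes.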

Deep learning, on the other hand, combines massive amounts of training data with greater computational power to extract features from unstructured data sets and learn them without human feature engineering. Loosely inspired by the biological structure of the human brain, deep learning uses neural networks to analyze patterns and find correlations in unstructured data such as images, audio, video and text. Deep neural networks (DNNs) are at the heart of today’s AI resurgence, as they allow more complex problems to be tackled and solved with higher accuracy and less cumbersome fine-tuning.
