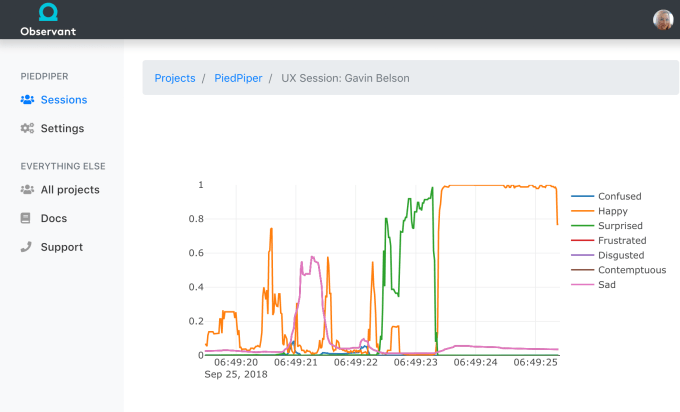

Observant has found a new way to use the fancy infrared depth sensors included on the iPhone X, XS and XR: analyzing people’s facial expressions to understand how they’re responding to a product or a piece of content.

Observant was part of the winter batch of startups at accelerator Y Combinator but was still in stealth mode on Demo Day. It was created by the same company behind the bug-reporting product Buglife, and CEO Dave Schukin said his team built it because they wanted to find better ways to capture user reactions.

We’ve written about other startups that try to do something similar using webcams and eye tracking, but Schukin (who co-founded the company with CTO Daniel DeCovnick) argued that those approaches are less accurate than Observant’s. In particular, he said they don’t capture subtler “microexpressions,” and they don’t perform as well in low light.

In contrast, he said, the infrared depth sensors can map your face in fine detail regardless of lighting, and Observant has also built deep learning technology to translate that facial data into emotions in real time.

Observant has created an SDK that can be installed in any iOS app. It can provide either a full, real-time stream of emotional analysis or individual snapshots of user responses tied to specific in-app events. The product is currently invite-only, but Schukin said it’s already live in some retail and e-commerce apps, and it’s also being used in focus group testing.

Of course, the idea of your iPhone capturing all your facial expressions might sound a little creepy, so he emphasized that as Observant brings on new customers, it’s working with them to ensure that when the data is collected, “users are crystal clear how it’s being used.” Plus, all the analysis happens on the user’s device, so no facial footage or biometric data gets uploaded.

Eventually, Schukin suggested, the technology could be applied more broadly, whether by helping companies provide better recommendations, adding more “emotional intelligence” to their chatbots or even detecting drowsy driving.

As for whether Observant can achieve those goals when it only works on three iPhone models, Schukin said, “When we started working on this almost a year ago, the iPhone X was the only iPhone [with these depth sensors]. Our thinking at the time was, we know how Apple works, we know how this technology propagates over time, so we’re going to place a bet that eventually these depth sensors will be on every iPhone and every iPad, and they’ll be emulated and replicated on Android.”

It’s too early to say whether Observant’s bet will pay off, but Schukin pointed to the sensors’ expansion from one iPhone model to three as a sign that things are moving in the right direction.
