Rapid development of more accurate simulator engines has given robotics researchers a unique opportunity to generate sufficient amounts of data that can be used to train robotic policies for real-world deployment. However, moving trained policies from “sim-to-real” remains one of the greatest challenges of modern robotics, due to the subtle differences encountered between the simulation and real domains, termed the “reality gap”. While some recent approaches, such as imitation learning and offline reinforcement learning, leverage existing data to prepare a policy for the reality gap, a more common approach is to simply provide more data by varying properties of the simulated environment, a process called domain randomization.

However, domain randomization can sacrifice performance for stability, as it seeks to optimize for a decent, stable policy across all tasks, but offers little room for improving the policy on a specific task. This lack of a common optimal policy between simulation and reality is frequently a problem in robotic locomotion applications, where there are varying physical forces at play, such as leg friction, body mass, and terrain differences. For example, given the same initial conditions for the robot’s position and balance, the surface type will determine the optimal policy — for an incoming flat surface encountered in simulation, the robot could accelerate to a higher speed, while for an incoming rugged and bumpy surface encountered in the real world, it should walk slowly and carefully to prevent falling.

In “Rapidly Adaptable Legged Robots via Evolutionary Meta-Learning”, we present a particular type of meta-learning based on evolutionary strategies (ES), an approach generally believed to only work well in simulation, and show that it can effectively and efficiently adapt a policy to a real-world robot in a completely model-free manner. Compared to previous approaches for adapting meta-policies, such as standard policy gradients, which do not allow sim-to-real adaptation, ES enables a robot to quickly overcome the reality gap and adapt to dynamic changes in the real world, some of which may not be encountered in simulation. This represents the first instance of successfully using ES for on-robot adaptation.

This research falls under the general class of meta-learning techniques, and is demonstrated on a legged robot. At a high level, meta-learning learns to solve an incoming task quickly without completely retraining from scratch, by combining past experiences with small amounts of experience from the incoming task. This is especially beneficial in the sim-to-real case, where most of the past experiences come cheaply from simulation, while a minimal, yet necessary amount of experience is generated from the real world task. The simulation experiences allow the policy to possess a general level of behavior for solving a distribution of tasks, while the real-world experiences allow the policy to fine-tune specifically to the real-world task at hand.

In order to train a policy to meta-learn, it is necessary to encourage the policy to adapt during simulation. Normally, this can be achieved by applying model-agnostic meta-learning (MAML), which searches for a meta-policy that can adapt to a specific task quickly using small amounts of task-specific data. The standard approach to computing such meta-policies is to use policy gradient methods, which seek to improve the likelihood of selecting the same action given the same state. In order to determine the likelihood of a given action, the policy must be stochastic, allowing for the action selected by the policy to have a randomized component. The real-world environment for deploying such robotic policies is also highly stochastic, as there can be slight differences in motion arising naturally, even when starting from the exact same state and action sequence. The combination of using a stochastic policy inside a stochastic environment creates two conflicting objectives:

- Decreasing the policy’s stochasticity may be crucial, as otherwise the high-noise problem might be exacerbated by the additional randomness from the policy’s actions.

- However, increasing the policy’s stochasticity may also benefit exploration, as the policy needs to use random actions to probe the type of environment to which it adapts.
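For reference, the meta-policy search that MAML performs can be written compactly. The notation here is an assumption introduced for illustration and does not appear in the text above: $\theta$ denotes the meta-policy parameters, $T$ a task drawn from a task distribution $P(\mathcal{T})$, $f^{T}$ the expected total reward on task $T$, and $U(\cdot, T)$ an adaptation operator that uses a small amount of data from $T$.

$$\max_{\theta} \; J(\theta) = \mathbb{E}_{T \sim P(\mathcal{T})}\left[ f^{T}\big(U(\theta, T)\big) \right]$$

In the policy gradient version of MAML, the adaptation operator is typically a single gradient step, $U(\theta, T) = \theta + \alpha \nabla_{\theta} f^{T}(\theta)$ with step size $\alpha$, and it is the estimation of this gradient from sampled actions that requires a stochastic policy.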

Evolutionary Strategies in Robotics

Instead, we resolve these challenges by applying ES-MAML, an algorithm that leverages a drastically different paradigm for high-dimensional optimization: evolutionary strategies. The ES-MAML approach updates the policy based solely on the sum of rewards collected by the agent in the environment. The function used for optimizing the policy is black-box, mapping the policy parameters directly to this reward. Unlike policy gradient methods, this approach does not need to collect state/action/reward tuples and does not need to estimate action likelihoods. This allows the use of deterministic policies and exploration based on parameter changes, and avoids the conflict between stochasticity in the policy and in the environment.
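To make the black-box view concrete, here is a minimal sketch of a generic ES update using antithetic Gaussian perturbations of the parameters. It is not the ES-MAML implementation: the function names, the hyperparameters, and the quadratic `total_reward` (a hypothetical stand-in for the sum of rewards from one episode) are all assumptions made for illustration.

```python
import numpy as np

def es_gradient(f, theta, sigma=0.1, num_samples=50, rng=None):
    """Antithetic ES estimate of the gradient of the Gaussian-smoothed f at theta."""
    rng = np.random.default_rng() if rng is None else rng
    eps = rng.standard_normal((num_samples, theta.size))
    # Query the black box at parameters perturbed in both directions.
    f_plus = np.array([f(theta + sigma * e) for e in eps])
    f_minus = np.array([f(theta - sigma * e) for e in eps])
    # Weight each perturbation direction by the reward difference it produced.
    return (f_plus - f_minus) @ eps / (2.0 * sigma * num_samples)

def total_reward(theta):
    # Hypothetical stand-in for an episode return; a real use would run
    # the (deterministic) policy with parameters theta and sum its rewards.
    target = np.array([1.0, -2.0, 0.5])
    return -np.sum((theta - target) ** 2)

def es_optimize(f, theta, steps=200, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    for _ in range(steps):
        # Gradient ascent on the total reward using only function queries.
        theta = theta + lr * es_gradient(f, theta, rng=rng)
    return theta

theta_final = es_optimize(total_reward, np.zeros(3))
```

Note that the optimizer only ever calls `f(theta)`: it needs no state/action/reward tuples and no action likelihoods, and all exploration comes from perturbing the parameters rather than the actions.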

In this paradigm, querying usually involves running episodes in the simulator, but we show that ES can also be applied to episodes collected on real hardware. ES optimization can be easily distributed and also works well for training efficient compact policies, a phenomenon with profound robotic implications, since policies with fewer parameters can be more easily deployed on real hardware and often lead to more efficient inference and power usage. We confirm the effectiveness of ES in training compact policies by learning adaptable meta-policies with <130 parameters.

The ES optimization paradigm is very flexible. It can be used to optimize non-differentiable objectives, such as the total reward objective in our robotics case. It also works in the presence of substantial (potentially adversarial) noise. In addition, the most recent forms of ES methods (e.g., guided ES) are much more sample-efficient than previous versions.

This flexibility is critical for efficient adaptation of locomotion meta-policies. Our results show that adaptation with ES can be conducted with a small number of additional on-robot episodes. Thus, ES is no longer just an attractive alternative to the state-of-the-art algorithms, but defines a new state of the art for several challenging RL tasks.

Adaptation in Simulation

We first examine the types of adaptation that emerge when training with ES-MAML in simulation. When testing the policy in simulation, we found that the meta-policy forces the robot to fall down when the dynamics become too unstable, whereas the adapted policy allows the robot to re-stabilize and walk again. Furthermore, when the robot’s leg settings change, the meta-policy de-synchronizes the robot’s legs, causing the robot to turn sharply, while the adapted policy corrects the robot so it can walk straight again.

Despite the good performance of ES-MAML in simulation, applying it to a real robot is still a challenge. To effectively adapt in the noisy environment of the real world while requiring as little real-world data as possible, we introduce batch hill-climbing, an add-on to ES-MAML based on previous work for zeroth-order blackbox optimization. Rather than performing standard hill-climbing, which iteratively updates the input one-by-one according to a deterministic objective, batch hill-climbing samples a parallel batch of queries to determine the next input, making it robust to large amounts of noise in the objective.
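The idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact procedure used on the robot: the candidate set (the current parameters plus Gaussian perturbations of them), the batch size, the step size, and the synthetic `noisy_reward` objective are all hypothetical choices made for the example.

```python
import numpy as np

def batch_hill_climb(f, theta, iterations=30, batch_size=8, step=0.2, seed=0):
    """Move to the best-scoring candidate from a sampled batch at each iteration."""
    rng = np.random.default_rng(seed)
    for _ in range(iterations):
        # Sample a batch of perturbed candidates around the current parameters.
        perturbations = step * rng.standard_normal((batch_size, theta.size))
        # Include the current parameters so the search can also stay in place.
        candidates = np.vstack([theta[None, :], theta + perturbations])
        # In practice these queries could run in parallel; here they run in a loop.
        scores = np.array([f(c, rng) for c in candidates])
        theta = candidates[np.argmax(scores)]
    return theta

def noisy_reward(theta, rng):
    # Hypothetical noisy objective standing in for a real-world episode return.
    target = np.array([0.5, -0.5])
    return -np.sum((theta - target) ** 2) + 0.01 * rng.standard_normal()

theta_adapted = batch_hill_climb(noisy_reward, np.zeros(2))
```

Because the current parameters are re-evaluated alongside each batch, a single lucky or unlucky noisy score is not carried forward as a fixed baseline across iterations.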

We then test our method on two tasks, the mass-voltage task and the friction task, which are designed to significantly change the dynamics from the normal setting of the robot:

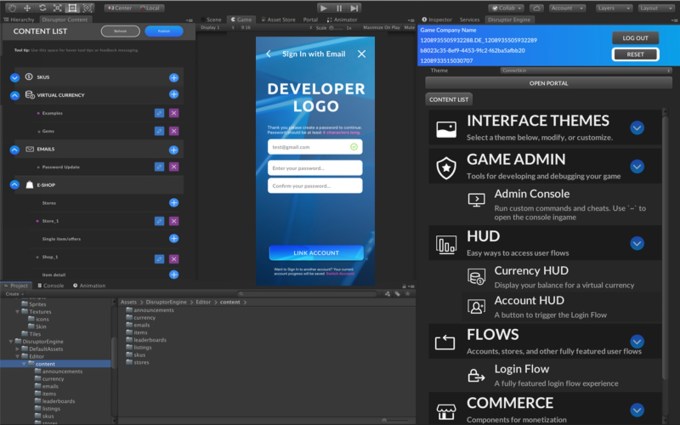

Qualitative changes during the adaptation phase under the mass-voltage task.

Comparisons between Domain Randomization and PG-MAML, and metric differences between our method’s meta-policy and adapted policy. Top: Comparison for the mass-voltage task. Our method stabilizes the robot’s roll angle. Bottom: Comparison for the friction task. Our method results in longer trajectories.

This work exposes several avenues for future development. One option is to make algorithmic improvements to reduce the number of real-world rollouts required for adaptation. Another area for advancement is the use of model-based reinforcement learning techniques for a lifelong learning system, in which the robot can continuously collect data and quickly adjust its policy to learn new skills and to operate optimally in new environments.

Acknowledgements

This research was conducted by the core ES-MAML team: Xingyou Song, Yuxiang Yang, Krzysztof Choromanski, Ken Caluwaerts, Wenbo Gao, Chelsea Finn, and Jie Tan. We would like to give special thanks to Vikas Sindhwani for his support on ES methods, and Daniel Seita for feedback on our paper.