YouTube today announced a series of changes designed to give users more control over what videos appear on the Homepage and in its Up Next suggestions, the latter of which are typically powered by an algorithm. The company also says it will offer users more visibility into why they’re being shown a recommended video, a peek into the YouTube algorithm that wasn’t previously possible.

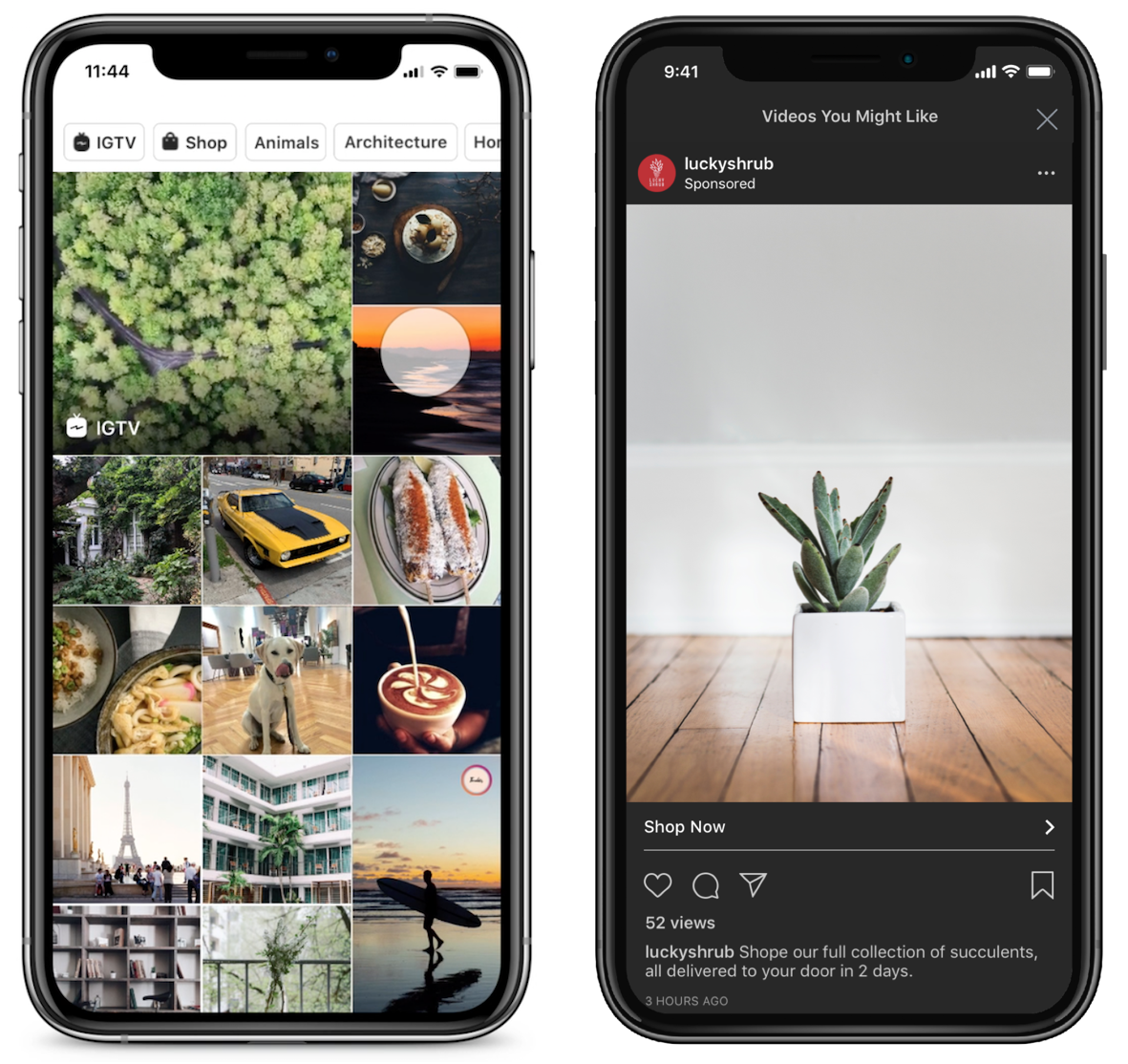

One new feature is designed to make it easier to explore topics and related videos both on the YouTube Homepage and in the Up Next video section. The app will now display personalized suggestions based on videos related to those you’re watching, videos published by the channel you’re watching, or others that YouTube thinks will be of interest.

This feature is rolling out to signed-in users in English on the YouTube app for Android and will be available on iOS, desktop and other languages soon, the company says.

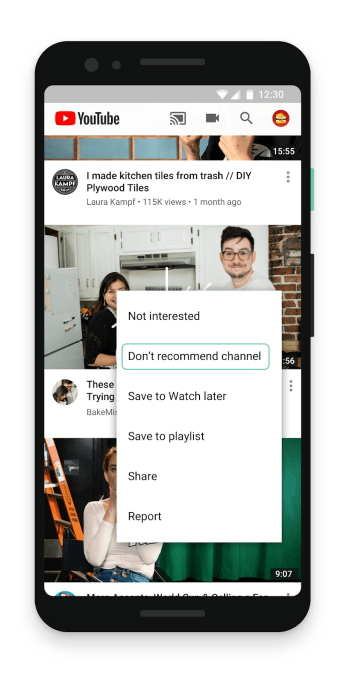

If YouTube’s suggestions aren’t right — and they often aren’t — users will now be able to access controls that explicitly tell the service to stop suggesting videos from a particular channel.

This will be available from the three-dot menu next to a video on the Homepage or Up Next. From there, you’ll click “Don’t recommend channel.” From that point forward, no videos from that channel will be recommended to you.

However, you’ll still be able to see the videos if you Subscribe, do a search for them, or visit the Channel page directly — they aren’t being hidden from you entirely, in other words. The videos may also still appear on the Trending tab, at times.

This feature is available globally on the YouTube app for Android and iOS starting today, and will be available on desktop soon.

Lastly, and perhaps most notably, YouTube is giving users slight visibility into how its algorithm works.

Before, people may not have understood why certain videos were recommended to them. Another new feature will detail the reasons why a video made the list.

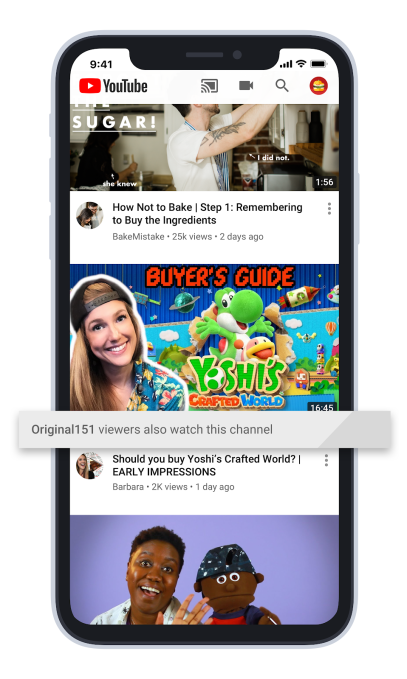

Now, underneath a video suggestion, YouTube will say why it was selected.

“Sometimes, we recommend videos from channels you haven’t seen before based on what other viewers with similar interests have liked and watched in the past,” explains the company in its announcement. “Our goal is to explain why these videos surface on your homepage in order to help you find videos from new channels you might like.”

For example, the explanation might say that viewers who watch one of your favorite channels also watch the channel that the recommended video comes from.

YouTube’s algorithm is likely far more complex than just “viewers who like this also like that,” but it’s a start, at least.

This feature is launching globally on the YouTube app for iOS today, and will be available on Android and desktop soon.

The changes come at a time when YouTube and other large social media companies are under pressure from government regulators over how they manage their platforms. Beyond issues around privacy, security, and the spread of hate speech and disinformation, platform providers are also being criticized for their reliance on opaque algorithms that determine what is shown to their end users.

YouTube, in particular, came under fire for how its own Recommendations algorithm was leveraged by child predators in the creation of a pedophilia “wormhole.” YouTube responded by shutting off the comments on kids’ videos where the criminals were sharing timestamps. But it stopped there.

“The company refused to turn off recommendations on videos featuring young children in leotards and bathing suits even after researchers demonstrated YouTube’s algorithm was recommending these videos to pedophiles,” wrote consumer advocacy groups in a letter to the FTC this week, urging the agency to take action against YouTube to protect children.

The FTC hasn’t commented on its investigation, as per policy, but confirmed it received the letter.

Explaining to end users how Recommendations work is only part of the problem.

The other issue is that YouTube’s algorithm can end up creating “filter bubbles,” which can lead users down dark paths, at times.

For instance, a recent story in The New York Times detailed how a person who came to YouTube for self-help videos was shown increasingly radical and extremist content, thanks to the algorithm’s recommendations, which pointed him to right-wing commentary, then to conspiracy videos, and finally to racist content.

The ability to explicitly silence some YouTube recommendations may help those who care enough to control their experience, but likely won’t solve the problem of those who just follow the algorithm’s suggestions. However, if YouTube were to eventually use this as a new signal (a downvote of sorts), it could influence the algorithm in other, more subtle ways.
