Two former product wizards from Facebook and Google are combining three of Silicon Valley's buzziest buzzwords (search, artificial intelligence and big data) into a new technology service aimed at solving nothing less than the problem of how to provide professional meaning in the modern world.

Founded by chief executive Ashutosh Garg, a former search and personalization expert at Google and IBM Research, and chief technology officer Varun Kacholia, who led the ranking team at Google and YouTube search and the News Feed team at Facebook, Eightfold.ai boasts an executive team with a combined eighty patents and over 6,000 citations for their research.

The two men have come together (in perhaps the most Silicon Valley fashion) to bring the analytical rigor that their former employers are famous for to the question of how best to help employees find fulfillment in the workforce.

“Employment is the backbone of society and it is a hard problem,” to match the right person with the right role, says Garg. “People pitch recruiting as a transaction… [but] to build a holistic platform is to build a company that fundamentally solves this problem,” of making work the most meaningful to the most people, he says.

It's a big goal, and it's backed by $24 million in funding from some big-time investors: Lightspeed Ventures and Foundation Capital.

The company’s executives say they want to wring all of the biases out of recruiting, hiring, professional development and advancement by creating a picture of an ideal workforce based on publicly available data collected from around the world. That data can be parsed and analyzed to create an almost Platonic ideal of any business in any industry.

That image of an ideal business is then overlaid on a company’s actual workforce to see how best to advance specific candidates and hire for roles that need to be filled to bring a business closer in line with its ideal.

“We have crawled the web for millions of profiles… including data from Wikipedia,” says Garg. “From there we have gotten data around how people have moved in organizations. We use all of this data to see who has performed well in an organization or not. Now what we do… we build models over this data to see who is capable of doing what.”

There are two important functions at play, according to Garg. The first is developing a talent network of a business: “the talent graph of a company,” as he calls it. “On top of that we map how people have gone from one function to another in their career.”

Using those tools, Garg says Eightfold.ai’s services can predict the best path for each employee to reach their full potential.

The company takes its name from Buddhism’s eightfold path to enlightenment, and while I’m not sure what the Buddha would say about the conflation of professional development with spiritual growth, Garg believes that he’s on the right track.

“Every individual with the right capability and potential placed in the right role is meaningful progress for us,” says Garg.

Eightfold.ai already counts over 100 customers using its tools across different industries. Its software has processed over 20 million applications to date and has increased response rates among its customers by 700 percent compared to the industry average, all while reducing screening costs and time by 90 percent, according to a statement.

“Eightfold.ai has an incredible opportunity to help people reach their full potential in their careers while empowering the workforces of the future,” said Peter Nieh, a partner at Lightspeed Ventures, in a statement. “Ashutosh and Varun are bringing to talent management the transformative artificial intelligence and data science capability that they brought to Google, YouTube and Facebook. We backed Ashutosh previously when he co-founded BloomReach and look forward to partnering with him again.”

The application of big data and algorithmically automated decision making to workforce development is a perfect example of how Silicon Valley approaches any number of problems — and with even the best intentions, it’s worth noting that these tools are only as good as the developers who make them.

Indeed, Kacholia and Garg's previous companies have been accused of relying too heavily on technology to solve what are essentially human problems.

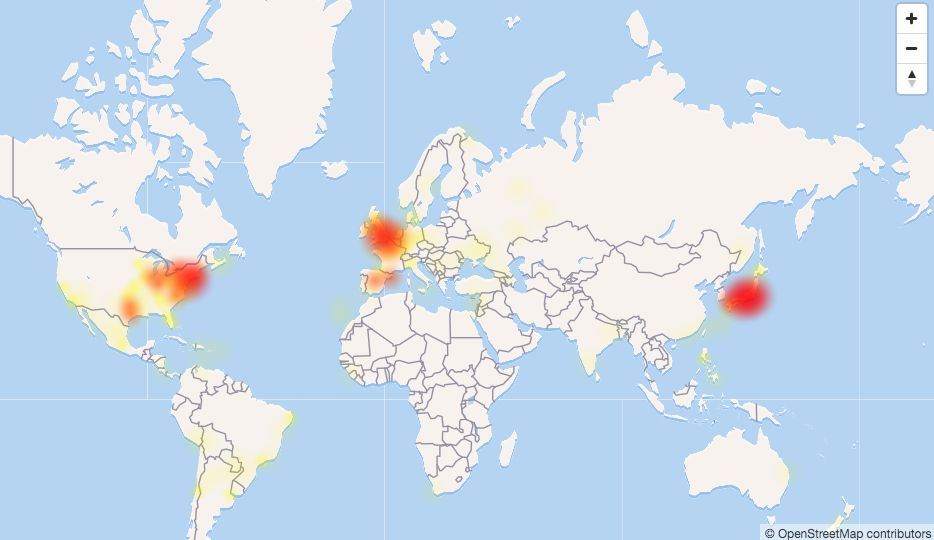

The proliferation of propaganda, politically minded meddling by foreign governments in domestic campaigns, and the promotion of hate speech online have been abetted in many cases by the faith that technology companies like Google and Facebook have placed in the tools they've developed to ensure that their information and networking platforms function properly (spoiler alert: they don't).

And applying these tools to work and workforce development is noble, but it should also be met with a degree of skepticism.

As an MIT Technology Review article from last year noted:

> Algorithmic bias is shaping up to be a major societal issue at a critical moment in the evolution of machine learning and AI. If the bias lurking inside the algorithms that make ever-more-important decisions goes unrecognized and unchecked, it could have serious negative consequences, especially for poorer communities and minorities. The eventual outcry might also stymie the progress of an incredibly useful technology (see “Inspecting Algorithms for Bias”).
>
> Algorithms that may conceal hidden biases are already routinely used to make vital financial and legal decisions. Proprietary algorithms are used to decide, for instance, who gets a job interview, who gets granted parole, and who gets a loan.

“Many of the biases people have in recruiting stem from the limited data people have seen,” Garg responded to me in an email. “With data intelligence we provide recruiters and hiring managers powerful insights around person-job fit that allow teams to go beyond the few skills or companies they might know of, dramatically increasing their pool of qualified candidates. Our diversity product further allows removal of any potential human bias via blind screening. We are fully compliant with EEOC and do not use age, sex, race, religion, disability, etc. in assessing fit of candidates to roles in enterprises.”

Making personnel decisions less personal by removing human bias from the process is laudable, but only if the decision-making systems are, themselves, untainted by those biases. In this day and age, that’s no guarantee.
